Getting Started with Fine-Tuning

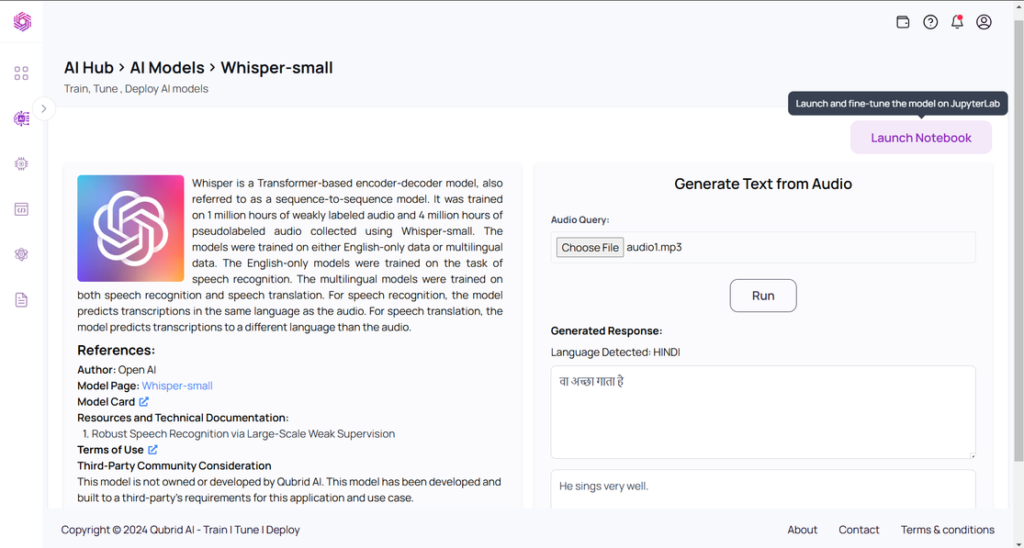

Let’s roll up our sleeves and dive into the Qubrid AI platform. Whether you’re a seasoned AI practitioner or a newcomer to the field, Qubrid AI’s intuitive interface makes fine-tuning a breeze. Begin by navigating to the Qubrid AI Hub section. Here, you’ll find a range of pre-trained models ready for fine-tuning to fit your specific needs. For the purpose of this guide, we’ll be selecting the whisper-small model as our base. However, rest assured that the steps outlined here apply to fine-tuning any AI model on the Qubrid AI platform.

For a more detailed guide on navigating the Qubrid AI Platform, check out the Qubrid Platform Getting Started Guide.

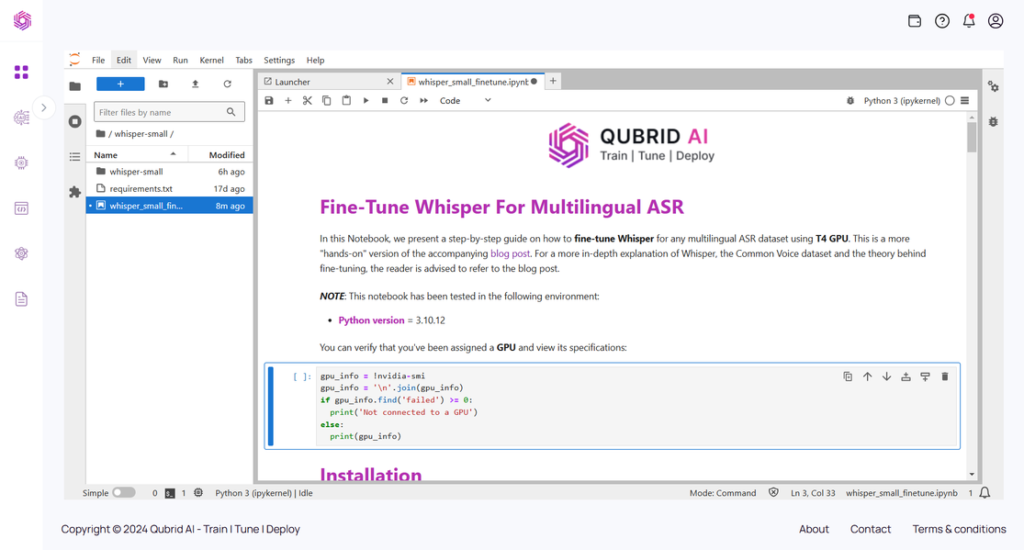

After launching the notebook on the Qubrid Platform as shown in the figure above, you will be presented with a JupyterLab environment equipped with the tools and resources needed for fine-tuning.

Here’s what you will find inside JupyterLab:

- GPU for Training

- Pre-Trained Model

- Fine-Tuning Sample Notebook

- Pre-requisite Packages

By providing these tools and resources, the Qubrid AI Platform simplifies the process of fine-tuning the Whisper model, allowing you to focus on optimizing the model’s performance for your multilingual ASR needs. Figure 3 shows what the fine-tuning notebook looks like when launched on the Qubrid AI platform.

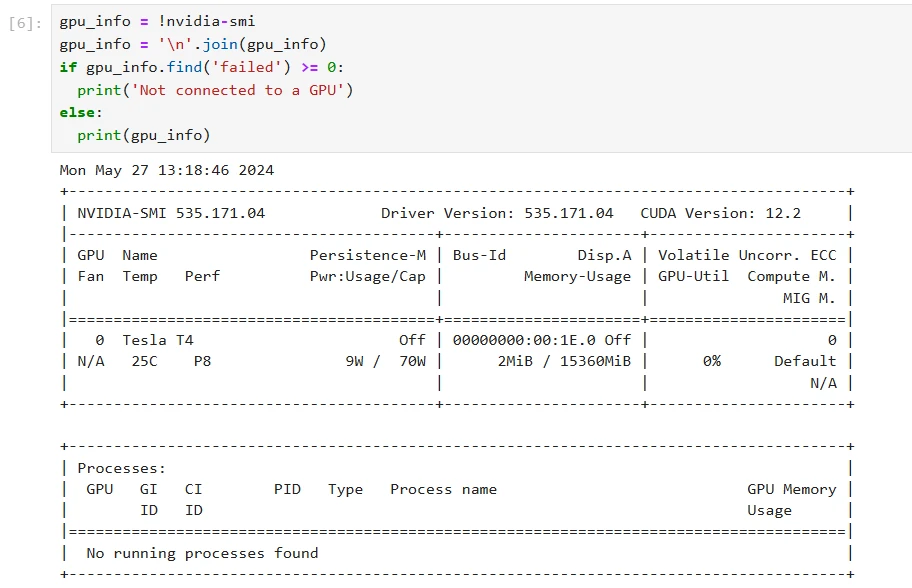

- Verify that you have a GPU for training the model

Ensure that your instance includes a GPU, which is crucial for efficiently training the model and significantly speeding up the fine-tuning process.

On the Qubrid AI Platform, you can access various types of GPUs such as T4, A10, L4, and more, making it suitable for training different AI models.

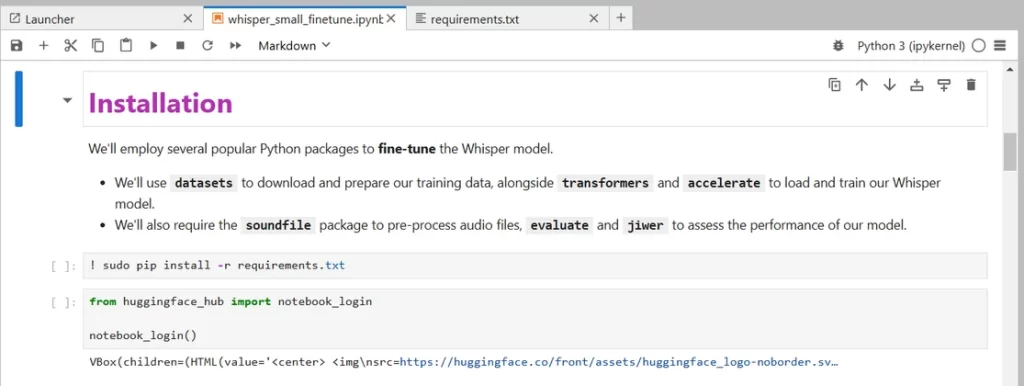
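As a quick way to run the GPU check from a notebook cell, here is a minimal sketch that asks `nvidia-smi` for the names of the visible GPUs. It assumes an NVIDIA driver is installed on the instance; the helper name `list_gpus` is ours and is not part of the Qubrid notebook.

```python
import shutil
import subprocess


def list_gpus():
    """Return the GPU names reported by nvidia-smi, or an empty list if none are visible."""
    # nvidia-smi ships with the NVIDIA driver; if it is missing, no GPU is usable.
    if shutil.which("nvidia-smi") is None:
        return []
    try:
        result = subprocess.run(
            ["nvidia-smi", "--query-gpu=name", "--format=csv,noheader"],
            capture_output=True,
            text=True,
            check=False,
            timeout=30,
        )
    except (OSError, subprocess.SubprocessError):
        return []
    if result.returncode != 0:
        return []
    # One GPU name per non-empty output line.
    return [line.strip() for line in result.stdout.splitlines() if line.strip()]


gpus = list_gpus()
print("GPUs found:", gpus if gpus else "none detected")
```

If the list comes back empty, it is worth checking the instance type before starting a multi-hour training run.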

- Install the pre-requisite packages saved in the `requirements.txt` file.

Providing these pre-requisite packages directly to the user ensures a smooth setup process, allowing the environment to be ready for fine-tuning without additional configuration.

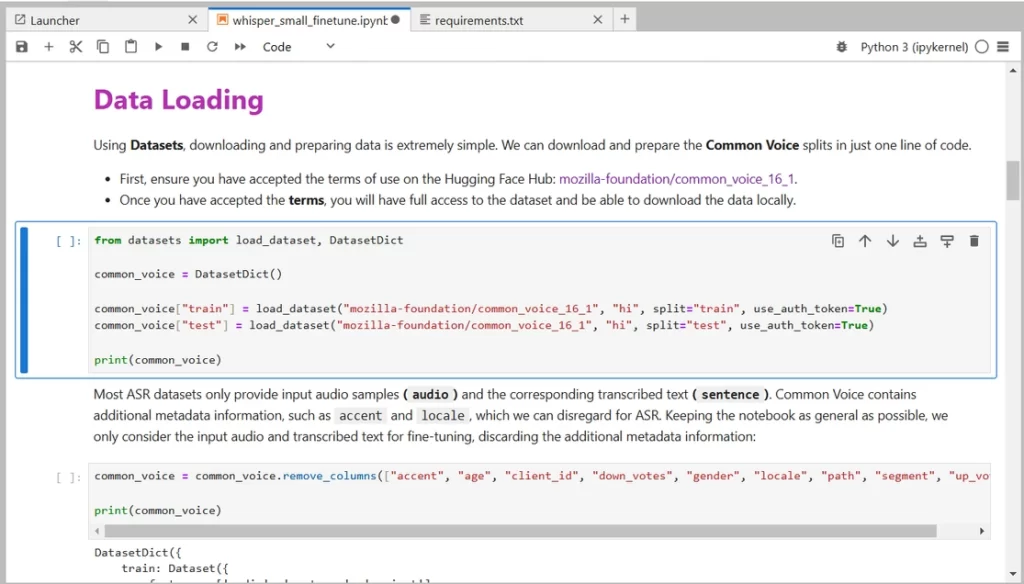
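Installation itself is typically a single `pip install -r requirements.txt`. To confirm afterwards that nothing was skipped, a small sketch like the one below can list requirements that are still missing. The package names in `sample_lines` are hypothetical placeholders, not the actual contents of the Qubrid `requirements.txt`, and the parser only handles simple `name` or `name==version` style lines.

```python
import re
from importlib import metadata


def requirement_names(lines):
    """Extract bare package names from simple requirements.txt lines."""
    names = []
    for line in lines:
        # Drop inline comments and surrounding whitespace.
        line = line.split("#", 1)[0].strip()
        # Skip blank lines and pip options such as "-r other.txt".
        if not line or line.startswith("-"):
            continue
        match = re.match(r"[A-Za-z0-9][A-Za-z0-9._-]*", line)
        if match:
            names.append(match.group(0))
    return names


def missing_packages(names):
    """Return the names that are not installed in the current environment."""
    missing = []
    for name in names:
        try:
            metadata.version(name)
        except metadata.PackageNotFoundError:
            missing.append(name)
    return missing


# Hypothetical file contents, for illustration only.
sample_lines = [
    "# example requirements",
    "example-pkg-a>=1.0",
    "example_pkg_b==2.3  # pinned",
    "",
    "-r other.txt",
]
names = requirement_names(sample_lines)
print("Parsed names:", names)
print("Missing:", missing_packages(names))
```

In the notebook you would pass the lines of the real file instead, for example `open("requirements.txt").read().splitlines()`.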

- Loading the Dataset

For fine-tuning the Whisper model, we will use the Common Voice dataset provided by the Mozilla Foundation. To access this dataset, you need to enable access through Hugging Face. This will allow you to seamlessly load and use the dataset for your training process.

In this guide we use the Hindi subset, but you can select any language that suits your task, since Common Voice provides data for many languages.

- Preparing the Whisper Model and Tools

In this step, we will load the essential components required for fine-tuning the Whisper model: the Whisper feature extractor, Whisper tokenizer, and the evaluation metric, which is Word Error Rate (WER). Additionally, we will load the Whisper model itself.
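To make the evaluation metric concrete: WER is the word-level edit distance between the reference transcript and the model's transcript (substitutions, deletions, and insertions), divided by the number of reference words. The sketch below is a plain-Python illustration of that definition; the notebook most likely relies on a library implementation instead, and the function name `word_error_rate` is ours.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance between the two transcripts, divided by reference length."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the processed reference prefix
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1      # reference word missing from hypothesis
            insertion = curr[j - 1] + 1  # extra word in hypothesis
            curr[j] = min(substitution, deletion, insertion)
        prev = curr
    return prev[-1] / len(ref)


# One substitution ("sat" -> "sit") and one deletion ("the") over 6 reference words.
print(word_error_rate("the cat sat on the mat", "the cat sit on mat"))
```

Because insertions count as errors, WER can exceed 100% when the hypothesis is much longer than the reference.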

All these steps are covered in the fine-tuning notebook for all the AI models. Access the notebook and the Whisper model here.

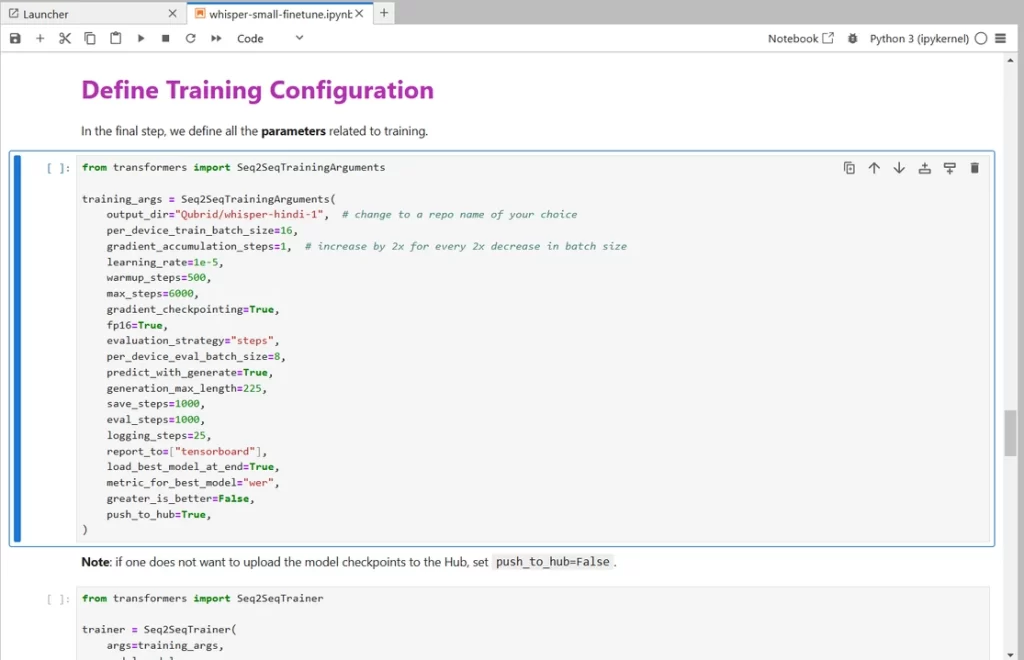

- Defining the Training Configuration

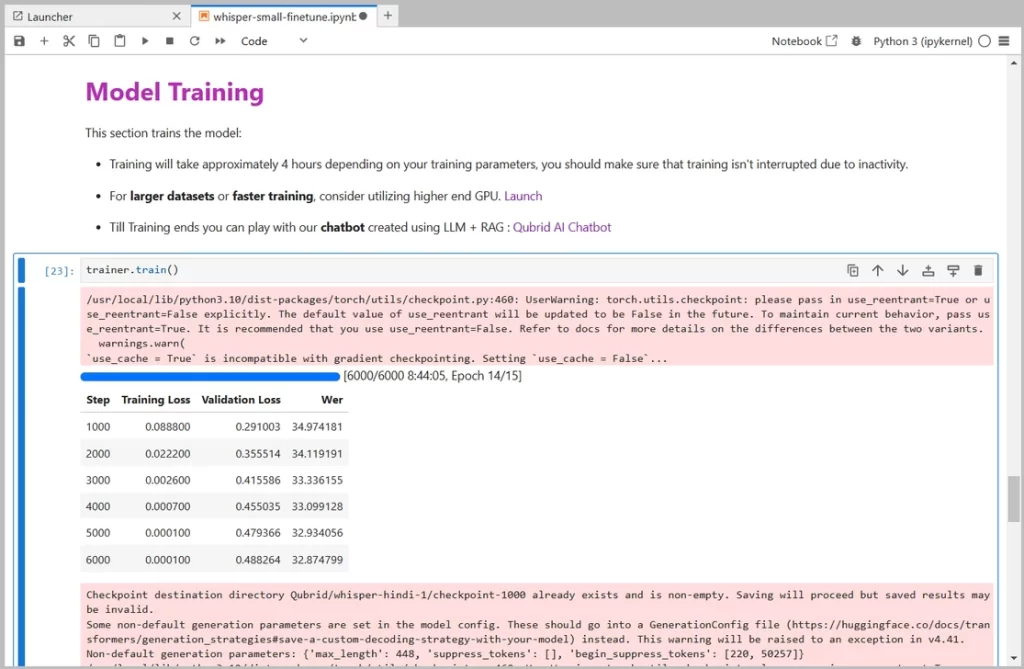
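For readers who cannot see the screenshot, here is a hedged sketch of what a training configuration for Whisper commonly looks like with the Hugging Face `transformers` library. Only `max_steps=6000` comes from this post (the step count reported in the results); every other value, including the output path, is an illustrative assumption and may differ from the Qubrid notebook.

```python
# Illustrative hyperparameters. Only max_steps is taken from this post;
# all other values are assumptions and should be tuned for your GPU and dataset.
TRAINING_KWARGS = {
    "output_dir": "./whisper-small-finetuned",  # hypothetical output path
    "per_device_train_batch_size": 16,
    "gradient_accumulation_steps": 1,
    "learning_rate": 1e-5,
    "warmup_steps": 500,
    "max_steps": 6000,
    "fp16": True,  # mixed precision; requires a CUDA GPU
    "predict_with_generate": True,  # decode text during evaluation so WER can be computed
    "save_steps": 1000,
    "eval_steps": 1000,
    "logging_steps": 25,
}


def build_training_args(**overrides):
    """Create Seq2SeqTrainingArguments from the defaults above plus any overrides."""
    # Imported lazily so the dictionary can be inspected without transformers installed.
    from transformers import Seq2SeqTrainingArguments

    return Seq2SeqTrainingArguments(**{**TRAINING_KWARGS, **overrides})


print("Checkpoints saved during training:",
      TRAINING_KWARGS["max_steps"] // TRAINING_KWARGS["save_steps"])
```

On a smaller GPU, a common adjustment is to halve `per_device_train_batch_size` and double `gradient_accumulation_steps`, which keeps the effective batch size unchanged.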

- Model Training

Training will take approximately 5-10 hours depending on your GPU. The training time may also vary with the pre-trained model you select.

- Results

The outcomes of fine-tuning on the Qubrid AI platform are remarkable. Using the guideline notebook provided by Qubrid AI, our best Word Error Rate (WER) is 32.87% after just 6000 training steps. For comparison, the pre-trained Whisper small model has a WER of 63.5%, so fine-tuning alone yields an absolute improvement of about 30.6 percentage points. These results were achieved with just 8 hours of training data.
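The size of the improvement can be checked with a short calculation from the two WER figures reported above. The relative improvement is not stated in the post; it is derived here from the same two numbers.

```python
baseline_wer = 63.5    # pre-trained Whisper small, in percent
finetuned_wer = 32.87  # best fine-tuned result, in percent

# Absolute gain is a difference in percentage points.
absolute_gain = baseline_wer - finetuned_wer
# Relative gain expresses that difference as a share of the baseline error.
relative_gain = absolute_gain / baseline_wer * 100

print(f"Absolute improvement: {absolute_gain:.2f} percentage points")
print(f"Relative improvement: {relative_gain:.1f}% of the baseline error")
```

In other words, fine-tuning removes roughly half of the baseline model's word errors.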

Furthermore, Qubrid AI offers users the flexibility to optimize their fine-tuning process by selecting from a range of GPU options. Whether it’s the entry-level GPU for those just starting their AI journey, the mid-range GPU option for balanced performance, or the high-performance GPU for cutting-edge projects, Qubrid AI ensures that users have the tools they need to achieve their desired outcomes efficiently and effectively.

Closing Remarks

In this blog, we covered a step-by-step guide to fine-tuning models (in this case, Whisper) using the Qubrid AI Platform. If you’re interested in fine-tuning other AI models, such as LLMs or Stable Diffusion, you are in the right place. Check out the Qubrid AI Hub right now!