Inferencing AI Models on Qubrid AI: On-Prem and in the Cloud

In artificial intelligence (AI), inferencing is a crucial process: applying a trained model to new data to make predictions or decisions. Deploying AI models for inference, particularly in real-time applications, requires a focus on performance, scalability, and efficiency. This is where Qubrid AI, a platform for optimized AI inferencing on-premises, in the cloud, and at the edge, shines. In this blog post, we explore what inferencing is, why it matters, and how Qubrid AI enhances AI model inferencing across these environments.

What is Inferencing in AI?

Inferencing is the phase of AI where the trained model is deployed to make predictions on new data. For example, a model trained for image recognition might analyze a photo to classify it, or a recommendation system might suggest products based on a user’s preferences. Unlike model training, which is computationally intensive and often done in the cloud, inferencing needs to be fast, efficient, and low-latency, especially for use cases that demand real-time predictions, such as autonomous vehicles, industrial IoT, or healthcare.
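To make the training/inference split concrete, here is a minimal sketch in plain Python. The weights below are hypothetical values standing in for the output of an earlier training run; inference only applies them to new inputs and never updates them.

```python
import math

# Hypothetical parameters produced by an earlier training run.
# At inference time they are fixed; nothing below modifies them.
WEIGHTS = [0.8, -0.5]
BIAS = -0.1


def predict_proba(features: list[float]) -> float:
    """Forward pass of a tiny logistic-regression model."""
    z = sum(w * x for w, x in zip(WEIGHTS, features)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))


def predict(features: list[float], threshold: float = 0.5) -> int:
    """Turn the predicted probability into a class decision (1 or 0)."""
    return 1 if predict_proba(features) >= threshold else 0


# New, unseen data arriving after deployment.
for sample in ([2.0, 1.0], [0.0, 1.5]):
    print(sample, predict(sample), round(predict_proba(sample), 3))
```

Real deep learning models have millions or billions of parameters instead of three, which is why the cost of this forward pass, repeated for every request, drives the hardware requirements discussed below.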

Running inference on edge devices, close to where data is produced, allows faster predictions and reduces data transmission. The challenge is that complex deep learning models must then run on hardware that is often limited in computational power, storage, and bandwidth.

The Challenge of Inferencing AI Models

AI models, especially deep learning models, are resource-intensive, which makes it hard to execute them efficiently on devices with limited resources such as smartphones, IoT sensors, or embedded systems. For workloads that need more compute, Qubrid AI enables inferencing on NVIDIA’s latest high-performance GPUs, such as the H200, H100, L40S, and others, for fast results.
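A rough way to see why model size drives hardware choice is to estimate the memory needed just to hold the weights: parameter count times bytes per parameter. The sketch below does that arithmetic for a hypothetical 7-billion-parameter model at a few common precisions. It ignores activations, caches, and framework overhead, so real requirements are higher.

```python
# Bytes used to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Memory in decimal gigabytes needed to hold the model weights alone."""
    return num_params * bytes_per_param / 1e9


# A hypothetical 7-billion-parameter LLM.
params = 7e9
for precision, nbytes in BYTES_PER_PARAM.items():
    print(f"{precision}: {weight_memory_gb(params, nbytes):.1f} GB")
```

At FP16 the weights alone take 14 GB, far more than many embedded devices offer but within the memory of a single data-center GPU such as those listed above.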

Running inference on GPUs, whether in the cloud or on on-prem appliances, provides several benefits:

- Reduced Latency: Processing data on high-end GPUs speeds up inference, enabling quicker decision-making.

- Lower Bandwidth Usage: Running inference locally on on-prem appliances reduces the need to transfer data continuously to the cloud, conserving bandwidth.

- Enhanced Privacy: Keeping sensitive data on local GPU appliances or restricted cloud tenants improves security and privacy.

- Energy Efficiency: By improving GPU utilization, Qubrid AI helps reduce energy costs during inferencing tasks.
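One common, generic way inference servers raise GPU utilization is request batching: instead of running each request alone, the server groups queued requests and runs them through the model together. The sketch below is not Qubrid AI’s implementation; it only shows the grouping logic, with a hypothetical `max_batch_size` and a stand-in `run_model` function in place of a real GPU call.

```python
from collections import deque
from typing import Callable


def run_model(batch: list[str]) -> list[str]:
    """Hypothetical stand-in for one batched forward pass on a GPU."""
    return [f"result:{item}" for item in batch]


def serve_in_batches(
    queue: deque[str],
    max_batch_size: int,
    model: Callable[[list[str]], list[str]] = run_model,
) -> tuple[list[str], int]:
    """Drain the queue in batches; return all results and the number of model calls."""
    results: list[str] = []
    calls = 0
    while queue:
        # Take up to max_batch_size waiting requests for a single model call.
        batch = [queue.popleft() for _ in range(min(max_batch_size, len(queue)))]
        results.extend(model(batch))
        calls += 1
    return results, calls


requests = deque(f"req{i}" for i in range(10))
outputs, num_calls = serve_in_batches(requests, max_batch_size=4)
print(num_calls, outputs[:2])
```

Ten queued requests with `max_batch_size=4` need three model calls instead of ten. Production servers usually also cap how long a request may wait for a batch to fill, trading a little latency for higher throughput.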

How Qubrid AI Optimizes Inferencing

Qubrid AI makes inferencing at the edge and in the cloud fast, efficient, and scalable. It provides a platform that supports a wide range of AI models across various categories, enabling businesses to deploy real-time, low-latency AI applications. Here’s how Qubrid AI optimizes inferencing:

Model Categories Supported on Qubrid AI

Qubrid AI supports a wide variety of AI models across multiple categories, enabling real-time inferencing for various applications. Here are the key categories:

- Text Generation (LLMs): Run large language models like Falcon, Mistral, Google’s Gemma, Meta’s Llama, and Microsoft’s Phi-3 for advanced text generation tasks.

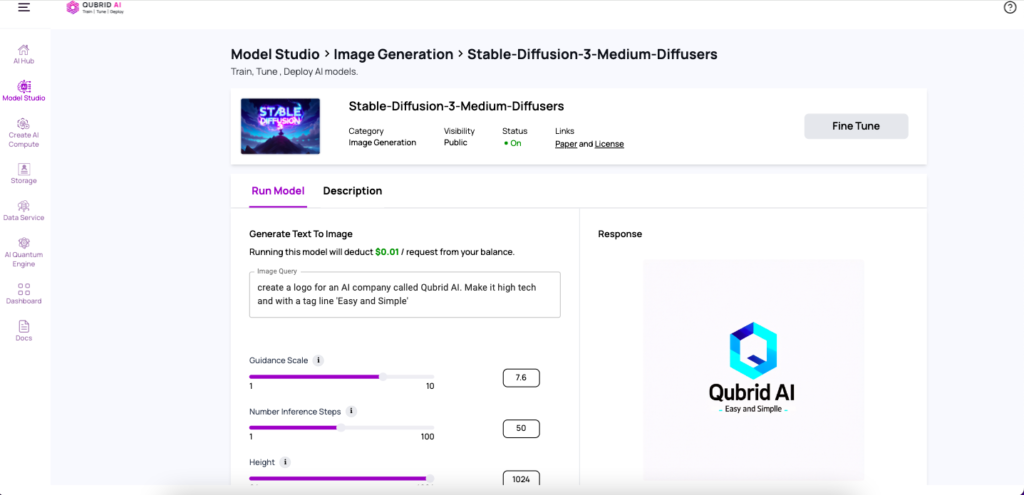

- Image Generation: Deploy Stability AI models for generating high-quality images from text prompts.

- Object Detection: Use models like YOLO for real-time object detection on edge devices.

- Text-to-3D: Run OpenAI’s Shap-E to generate 3D objects from text descriptions.

- Speech-to-Text (Whisper models): Transcribe spoken audio into text using OpenAI’s Whisper models.

- Image-to-Video: Use AnimateDiff to transform static images into dynamic video content.

- Vision-Language Models (Image-Text-to-Text): Leverage Microsoft’s and Meta’s vision-language models for tasks like image captioning and cross-modal reasoning.

- Code Generation: Deploy models that assist in generating code and automating programming tasks, streamlining development.

Qubrid AI ensures that these models run efficiently on cloud and on-prem server appliances, making it possible to leverage cutting-edge AI regardless of deployment infrastructure.
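For a concrete look at one inference step behind the object-detection category: detectors such as YOLO typically emit many overlapping candidate boxes, and a post-processing pass called non-maximum suppression (NMS) keeps only the highest-scoring box per object. Below is a minimal, framework-free sketch of greedy NMS. The `(x1, y1, x2, y2)` box format and the 0.5 IoU threshold are illustrative assumptions, and production pipelines use optimized GPU implementations instead.

```python
Box = tuple[float, float, float, float]  # (x1, y1, x2, y2) with x2 > x1, y2 > y1


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: list[Box], scores: list[float], iou_threshold: float = 0.5) -> list[int]:
    """Greedy NMS: return indices of kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: list[int] = []
    while order:
        best = order.pop(0)
        keep.append(best)
        # Discard remaining boxes that overlap the kept box too much.
        order = [i for i in order if iou(boxes[best], boxes[i]) <= iou_threshold]
    return keep


candidates = [(10, 10, 50, 50), (12, 12, 52, 52), (100, 100, 140, 140)]
confidences = [0.9, 0.75, 0.8]
print(nms(candidates, confidences))
```

The first two boxes overlap heavily (IoU of about 0.82), so only the higher-scoring one survives, while the distant third box is kept: the call returns `[0, 2]`.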

Use Cases of Inferencing AI Models with Qubrid AI

Qubrid AI’s inferencing capabilities are transforming industries by enabling real-time, intelligent decision-making. Here are some simple use cases:

- Generate Image

- Generate Document

- Generate Code

- Generate 3D Shapes

- Image Analysis

- Transcribe Speech
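As a small illustration of what the "Transcribe Speech" use case involves at inference time: Whisper models consume audio in 30-second windows sampled at 16 kHz, so longer recordings are split into segments, and a short final segment is padded. The sketch below shows only that splitting step on a raw list of samples. `transcribe_segment` is a hypothetical placeholder for the actual model call, and real pipelines handle segment boundaries more carefully than this fixed-size cut.

```python
SAMPLE_RATE = 16_000  # Whisper expects 16 kHz mono audio
WINDOW_SECONDS = 30   # Whisper processes audio in 30-second windows


def split_into_windows(samples: list[float]) -> list[list[float]]:
    """Cut audio into consecutive 30 s windows, zero-padding the last one."""
    window = SAMPLE_RATE * WINDOW_SECONDS
    chunks = []
    for start in range(0, len(samples), window):
        chunk = samples[start:start + window]     # slicing makes a copy
        chunk += [0.0] * (window - len(chunk))    # pad a short final window
        chunks.append(chunk)
    return chunks


def transcribe_segment(chunk: list[float]) -> str:
    """Hypothetical placeholder for a call to a deployed Whisper model."""
    return f"<{len(chunk) / SAMPLE_RATE:.0f}s of audio>"


# 75 seconds of silence as stand-in input.
audio = [0.0] * (SAMPLE_RATE * 75)
segments = split_into_windows(audio)
print(len(segments), [transcribe_segment(s) for s in segments])
```

A 75-second recording becomes three windows (30 s, 30 s, and 15 s padded to 30 s), each of which can be sent to the model independently or in a batch.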

Inferencing AI models in the cloud, on-premises, and at the edge is changing the way industries deploy and scale AI applications. With Qubrid AI, you can run cutting-edge AI models with low latency, supporting real-time decision-making across a wide range of devices and use cases. Whether in healthcare, autonomous systems, or industrial automation, Qubrid AI brings AI inferencing to the cloud and the edge, helping businesses build intelligent, responsive, and scalable AI systems.