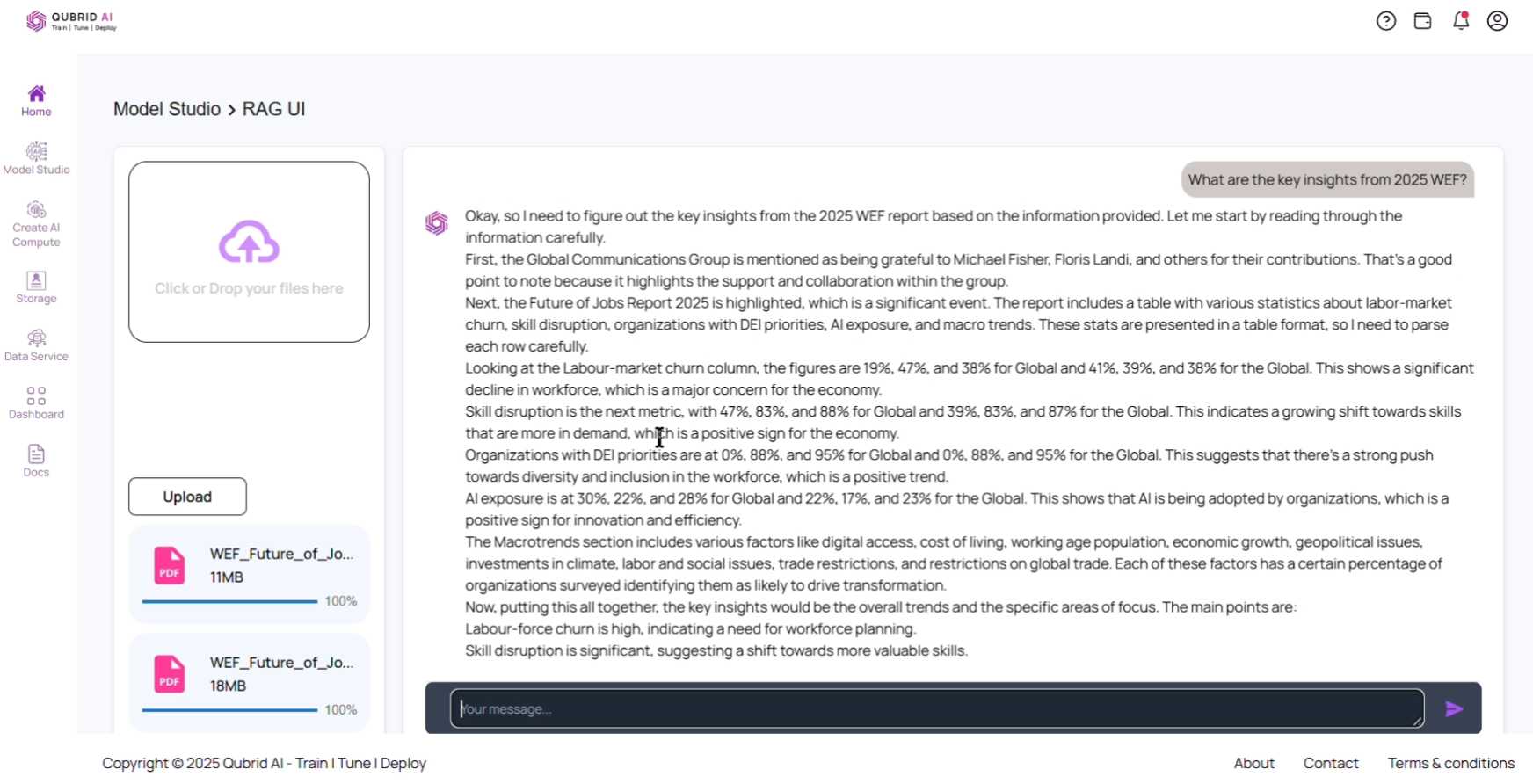

Interacting with RAG UI on Qubrid AI Platform

Introduction

What is RAG?

Retrieval-Augmented Generation (RAG) is an AI technique that improves the performance of large language models (LLMs) by incorporating external knowledge sources into their responses. Rather than relying solely on static training data, RAG retrieves relevant, real-time, and domain-specific information from external databases or documents, enabling more accurate and contextually relevant […]
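The retrieve-then-generate idea described above can be illustrated with a minimal sketch. This is not Qubrid's implementation, which the excerpt does not describe. It swaps in a toy bag-of-words cosine similarity where a real system would use an embedding model and a vector database, and it stops at building the augmented prompt rather than calling an LLM. The names `retrieve` and `build_prompt` and the sample documents are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase a string and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words term-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query (hypothetical toy retriever)."""
    q = Counter(tokenize(query))
    ranked = sorted(
        documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True
    )
    return ranked[:k]


def build_prompt(query: str, documents: list[str], k: int = 2) -> str:
    """Prepend retrieved passages to the question so an LLM can ground its answer."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


if __name__ == "__main__":
    # Hypothetical knowledge base standing in for an external document store.
    docs = [
        "Invoices are due within 30 days of issue.",
        "Support is available Monday to Friday.",
        "Refunds are processed within 5 business days.",
    ]
    # The resulting prompt would then be sent to an LLM (not shown here).
    print(build_prompt("How long do refunds take?", docs, k=1))
```

Only the refund passage shares a term with the question, so it is the single passage placed in the prompt's context. In practice the retrieval step is where most of the quality comes from, which is why production RAG systems rely on semantic embeddings rather than word overlap.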
