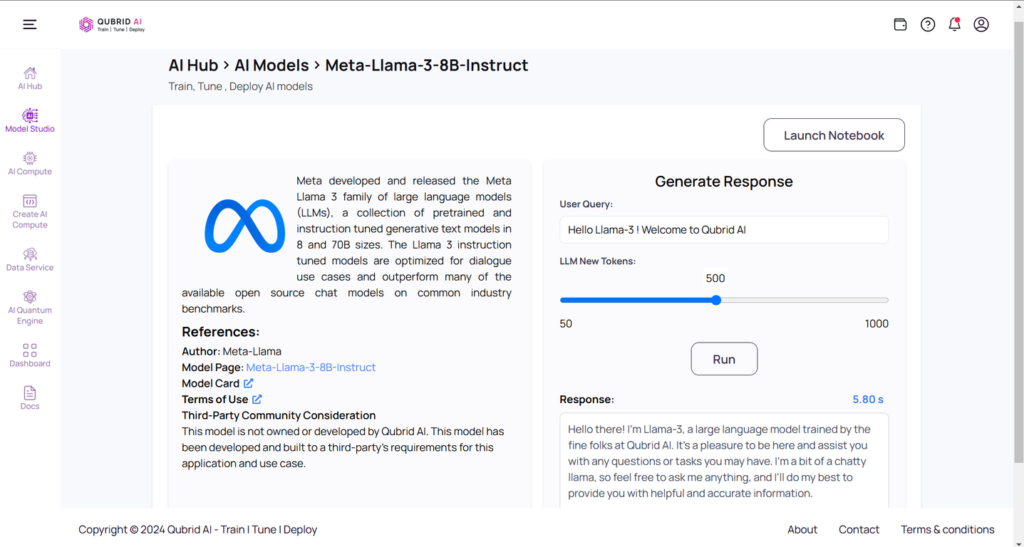

In this technical blog, we provide a comprehensive tutorial on fine-tuning Llama-3, a large language model (LLM), using the Qubrid AI Platform. The platform features an AI Hub with various models, including Llama-3. There, users can write a text prompt and receive responses that showcase the natural language understanding and generation capabilities of large language models. This guide explains the Llama-3 model, the Summary dataset, and the theory behind fine-tuning, and it walks through the data preparation and fine-tuning steps. If you want to fine-tune the model yourself, you can open the fine-tuning notebook directly from the platform. For a more detailed guide to navigating the Qubrid AI Platform, check out the Qubrid Platform Getting Started Guide.

In Figure 1, we demonstrate inference with the Llama-3 model using a sample prompt. The response creatively credits Qubrid AI with its training, but the Llama-3 model was actually developed and trained by Meta. The response is still a good example of the coherent, creative text that large language models like Llama-3 can produce. Qubrid AI provides a platform for working with such models, so users can explore and apply them effectively.

Introduction

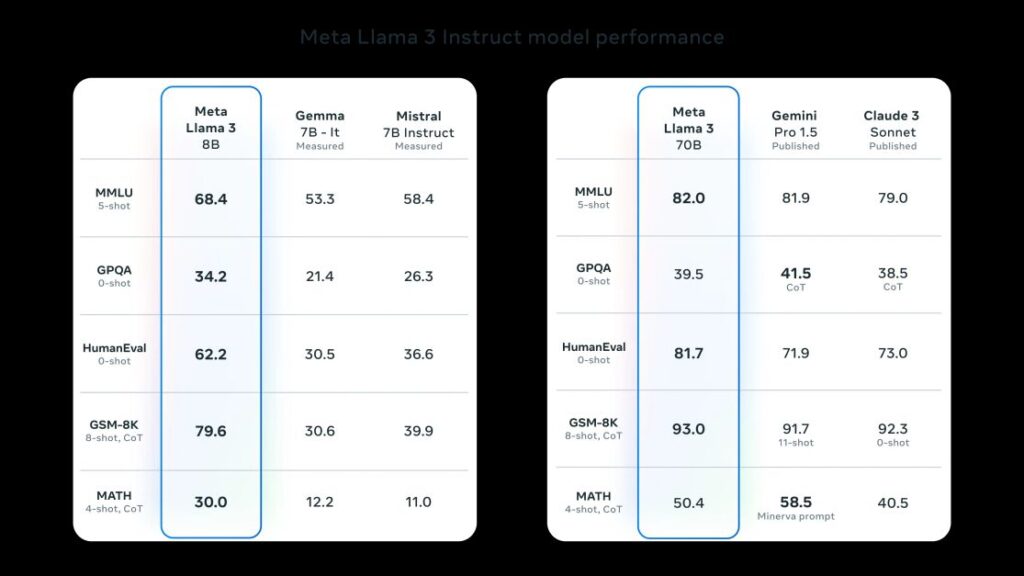

For our fine-tuning tutorial on the Qubrid AI Platform, we chose the Llama 3 8B Instruct model. The new 8B-parameter Llama 3 models are a significant advance over their predecessor, Llama 2, and set a new standard for LLMs at this scale. Thanks to improvements in pretraining and post-training, Llama 3 models substantially reduce false refusal rates, improve alignment, and increase the diversity of model responses. They also show stronger reasoning, code generation, and instruction following, which makes them more steerable. Meta evaluated Llama 3 on standard benchmarks and real-world scenarios, using a high-quality human evaluation set of 1,800 prompts covering 12 key use cases. On the Qubrid AI Platform, users can fine-tune the Llama 3 8B Instruct model for their own specific tasks.

Figure source: Meta Llama 3

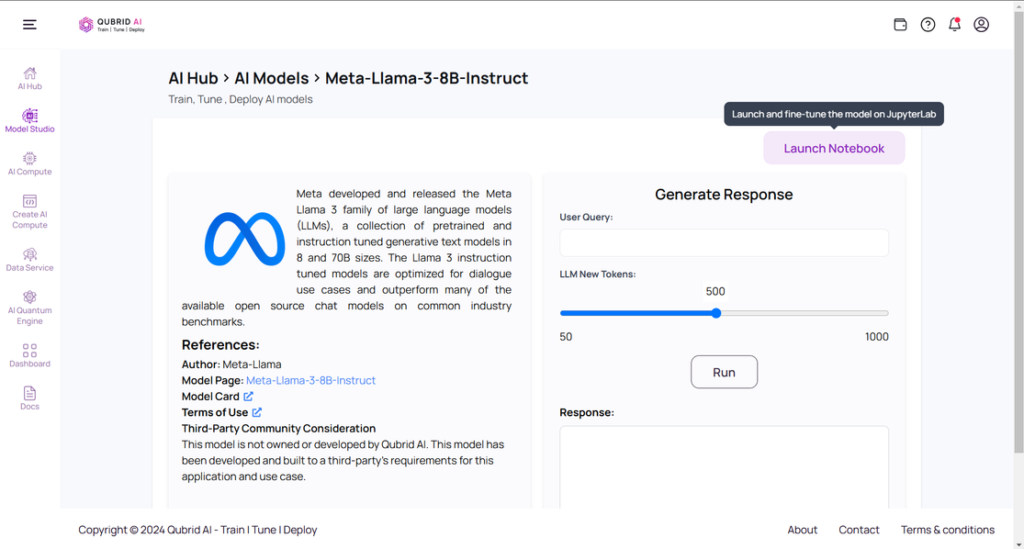

Fine-Tuning Llama-3 in a Jupyter Notebook

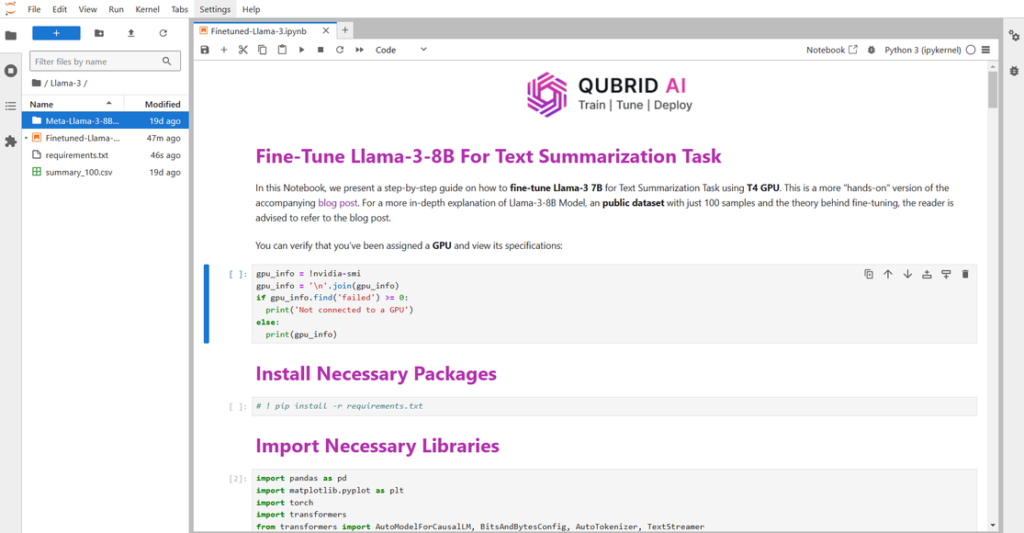

After launching the notebook on the Qubrid Platform, you will be presented with a JupyterLab environment equipped with several essential tools and resources to facilitate the fine-tuning process (see Figure 3).

Here’s what you will find inside JupyterLab:

- GPU for Training

- Pre-Trained Model

- Fine-Tuning Sample Notebook

- Pre-requisite Packages

By providing these tools and resources, the Qubrid AI Platform simplifies the process of fine-tuning the Llama-3 model, allowing you to focus on optimizing the model’s performance. Figure 4 shows what the fine-tuning notebook looks like when launched on the Qubrid AI platform.

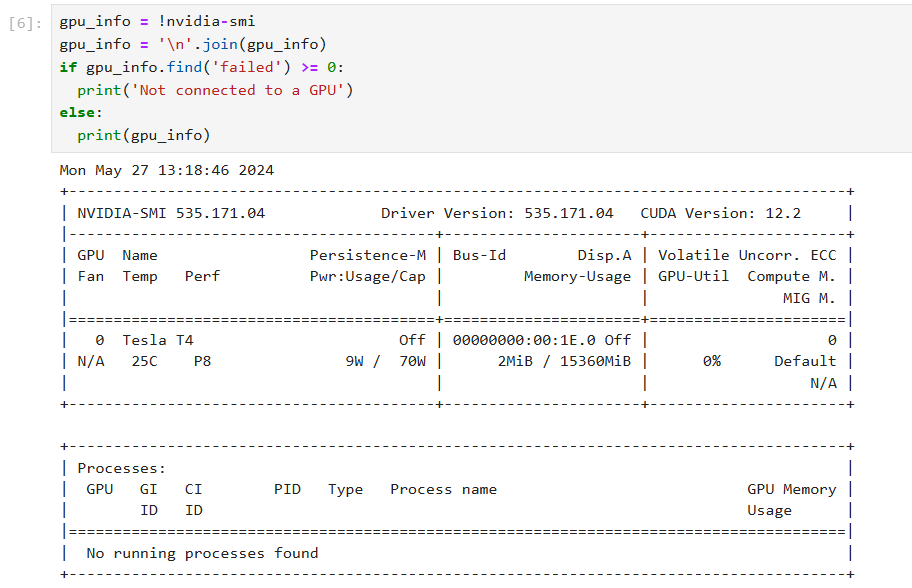

1. Verify that you have a GPU for training the Llama-3 model.

Ensure that your instance includes a GPU. A GPU is essential for training the Llama-3 model efficiently and significantly speeds up fine-tuning. The Qubrid AI Platform offers several GPU types, such as T4, A10G, and L4, so you can choose hardware suited to different AI models. L40S and H100 GPUs will also be available on the platform soon.
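A quick way to confirm the GPU from inside the notebook is to ask the NVIDIA driver. The stdlib-only sketch below assumes the `nvidia-smi` utility installed with the NVIDIA driver is on the `PATH`. The helper names are hypothetical. In a PyTorch session, `torch.cuda.is_available()` and `torch.cuda.get_device_name(0)` answer the same question.

```python
import shutil
import subprocess


def parse_gpu_query(csv_text: str) -> list:
    """Parse output of
    `nvidia-smi --query-gpu=name,memory.total --format=csv,noheader,nounits`.

    Each non-empty line looks like "Tesla T4, 15360" (name, total memory in MiB).
    """
    gpus = []
    for line in csv_text.strip().splitlines():
        if not line.strip():
            continue
        # rsplit so that a comma inside a GPU name cannot break the memory field
        name, mem_mib = line.rsplit(",", 1)
        gpus.append((name.strip(), int(mem_mib.strip())))
    return gpus


def detect_gpus() -> list:
    """Return [(gpu_name, memory_mib), ...], or [] if no NVIDIA driver/GPU is found."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv,noheader,nounits"],
        capture_output=True,
        text=True,
        check=False,
    )
    if result.returncode != 0:
        return []
    return parse_gpu_query(result.stdout)
```

Calling `detect_gpus()` in a notebook cell should return one `(name, memory_mib)` pair per attached GPU. An empty list means the instance has no usable NVIDIA GPU, and you should attach one before continuing.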

2. Install the prerequisite packages listed in the `requirements.txt` file.

Providing these prerequisite packages directly to the user ensures a smooth setup process, as shown in Figure 4, so the environment is ready for fine-tuning without additional configuration.

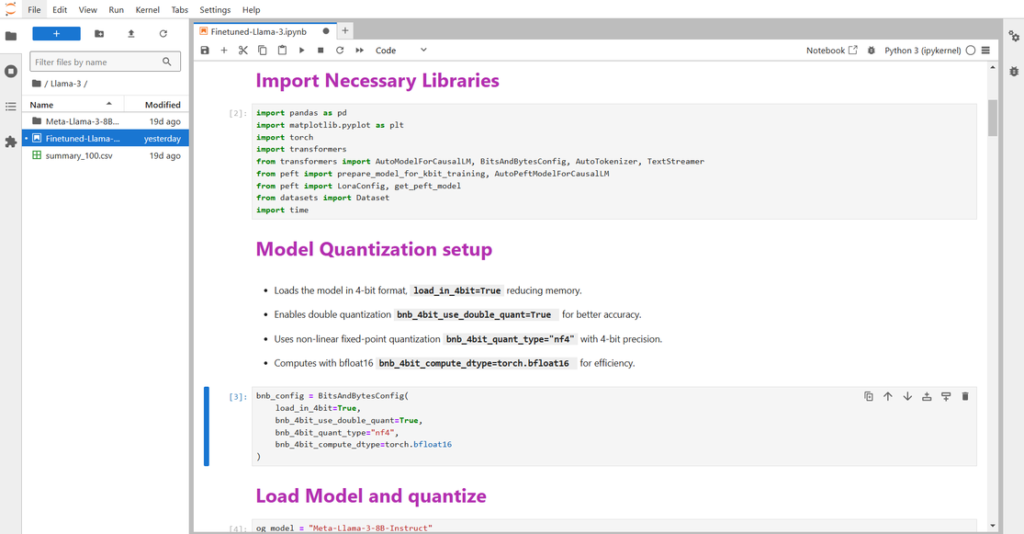
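Inside a notebook, this step is usually a single `%pip install -r requirements.txt` cell. The stdlib sketch below does the same from plain Python and can also report which listed packages are still missing. The contents of `requirements.txt` are not shown in this post, so any package names you test it with are your own. The name parsing is deliberately simple: it covers basic `name[extras]>=version` lines, not every pip syntax.

```python
import re
import subprocess
import sys
from importlib import metadata


def requirement_names(req_text: str) -> list:
    """Extract bare package names from requirements.txt text (simplified parser)."""
    names = []
    for raw in req_text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding whitespace
        if not line or line.startswith("-"):  # skip blanks and pip options such as -r
            continue
        # The name ends at the first version specifier, extras bracket, marker, or space
        names.append(re.split(r"[<>=!~;\[\s@]", line, maxsplit=1)[0])
    return names


def missing_packages(names: list) -> list:
    """Return the names that are not installed in the current environment."""
    missing = []
    for name in names:
        try:
            metadata.version(name)
        except metadata.PackageNotFoundError:
            missing.append(name)
    return missing


def install_requirements(path: str = "requirements.txt") -> None:
    """Install everything in `path` into the interpreter running this notebook."""
    subprocess.check_call([sys.executable, "-m", "pip", "install", "-r", path])
```

After `install_requirements()`, calling `missing_packages(requirement_names(open("requirements.txt").read()))` should return an empty list.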

3. Importing packages and Model Quantization Setup.

To begin fine-tuning your first LLM, you’ll need to import several essential libraries for model quantization, data loading, and data preparation. The notebook includes all the necessary quantization steps, so it is accessible even if you are new to training large language models. It guides you through the entire process of fine-tuning the Llama 3 8B Instruct model.

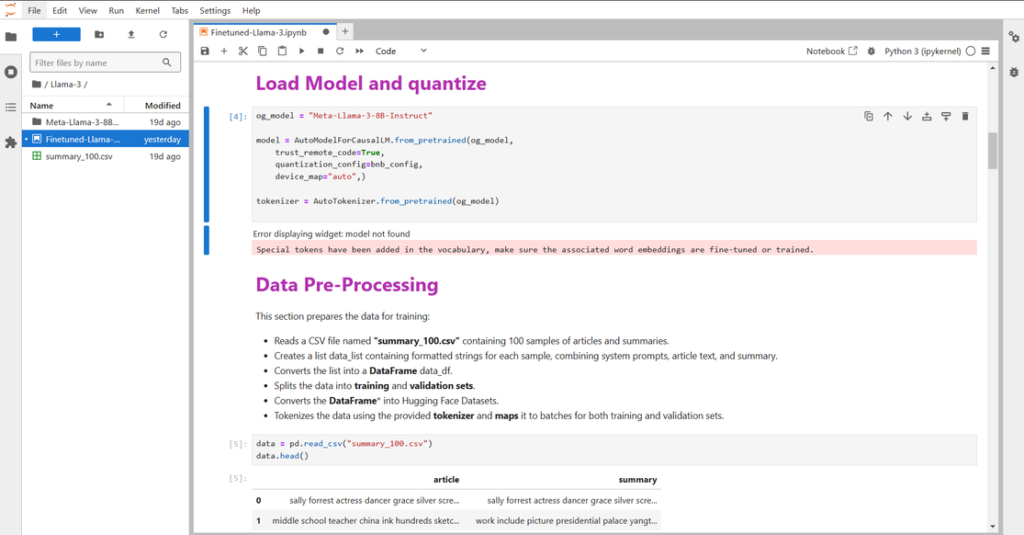
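A back-of-the-envelope count of weight memory shows why quantization matters here. The sketch below assumes a nominal 8 billion parameters (the exact count for Llama 3 8B is slightly higher). It counts only the weights and ignores activations, the KV cache, and quantization overhead such as scaling factors, so real usage is higher. In `transformers`, 4-bit loading is commonly configured with `BitsAndBytesConfig(load_in_4bit=True, ...)`, but the notebook's exact settings are not shown in this post.

```python
GIB = 2**30  # bytes per gibibyte


def weight_memory_gib(n_params: float, bits_per_param: int) -> float:
    """Memory needed to hold just the model weights, in GiB."""
    return n_params * bits_per_param / 8 / GIB


# Nominal parameter count, an approximation used for illustration
N_PARAMS = 8e9
for label, bits in [("fp32", 32), ("fp16/bf16", 16), ("int8", 8), ("4-bit", 4)]:
    print(f"{label:>9}: {weight_memory_gib(N_PARAMS, bits):6.2f} GiB")
```

This prints about 29.80, 14.90, 7.45, and 3.73 GiB respectively. At 16 bits, the weights alone would fill essentially all of a 16 GB T4. With 4-bit weights, most of the card stays free for training.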

4. Loading the quantized model and the local dataset.

The next step is loading the quantized model and the local dataset. Figure 7 shows this step in detail.
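The post does not show the dataset file itself. As a sketch, assume the local dataset is a JSON Lines file (the filename `summary_data.jsonl` is hypothetical) where each record has an `article` and a `summary` field. The loader below reads it with the standard library and rejects malformed records early. With Hugging Face `datasets` installed, `load_dataset("json", data_files=...)` reads the same format.

```python
import json
from typing import Iterable


def parse_summary_records(lines: Iterable[str], required=("article", "summary")) -> list:
    """Parse JSON Lines text, checking every record has non-empty required string fields."""
    records = []
    for line_no, line in enumerate(lines, start=1):
        if not line.strip():
            continue  # tolerate blank lines
        record = json.loads(line)
        bad = [k for k in required if not isinstance(record.get(k), str) or not record[k].strip()]
        if bad:
            raise ValueError(f"line {line_no}: missing or empty field(s): {bad}")
        records.append(record)
    return records


def load_summary_records(path) -> list:
    """Read a local .jsonl file, e.g. the hypothetical 'summary_data.jsonl'."""
    with open(path, encoding="utf-8") as f:
        return parse_summary_records(f)
```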

5. Data Preprocessing and Model preparation.

Next in the workflow are data preprocessing and model preparation. The data is converted into a structured format suitable for training, with each article and its summary formatted as model input. The dataset is then split into training and validation sets. The model is configured for k-bit (KBIT) training, that is, training on top of quantized weights, which greatly reduces memory requirements. These steps get the model ready for fine-tuning on your specific task, and all of them are covered in the fine-tuning notebook. Access the notebook and the Meta Llama-3-8B model here.

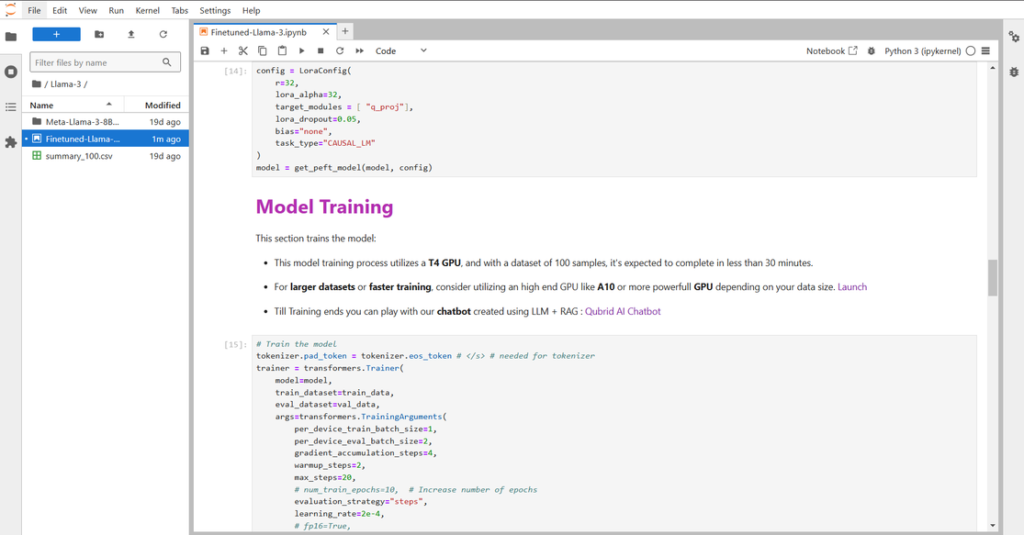
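The post does not show the exact prompt template or split ratio, so the sketch below treats both as explicit assumptions. Each record is rendered in the Llama 3 Instruct chat format, with the article in the user turn and the reference summary as the assistant reply. The records are then shuffled with a fixed seed and split 90/10. The instruction wording is hypothetical. In practice, `tokenizer.apply_chat_template` from `transformers` is the safer way to produce this format, because it reads the template shipped with the model.

```python
import random
from typing import Optional

# Hypothetical instruction wording; the notebook's actual prompt is not shown in the post.
INSTRUCTION = "Summarize the following article."


def to_llama3_prompt(article: str, summary: Optional[str] = None) -> str:
    """Render one record in the Llama 3 Instruct chat format.

    With a summary, the result is a complete training example ending in <|eot_id|>.
    Without one, it stops at the assistant header, ready for generation.
    The string starts with <|begin_of_text|>, so tokenize it with
    add_special_tokens=False to avoid a duplicated BOS token.
    """
    prompt = (
        "<|begin_of_text|>"
        "<|start_header_id|>user<|end_header_id|>\n\n"
        f"{INSTRUCTION}\n\n{article.strip()}<|eot_id|>"
        "<|start_header_id|>assistant<|end_header_id|>\n\n"
    )
    if summary is None:
        return prompt
    return prompt + summary.strip() + "<|eot_id|>"


def train_val_split(records: list, val_fraction: float = 0.1, seed: int = 42):
    """Deterministically shuffle a copy of `records` and return (train, val)."""
    if not 0 < val_fraction < 1:
        raise ValueError("val_fraction must be strictly between 0 and 1")
    shuffled = list(records)  # copy so the caller's list keeps its order
    random.Random(seed).shuffle(shuffled)
    n_val = max(1, round(len(shuffled) * val_fraction))
    return shuffled[n_val:], shuffled[:n_val]
```

For the 100-sample dataset used in this tutorial, the split yields 90 training and 10 validation examples, and the same seed always produces the same split.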

6. Model Training Configuration.

This training run uses a T4 GPU. With a dataset of 100 samples, it is expected to complete in under 30 minutes.

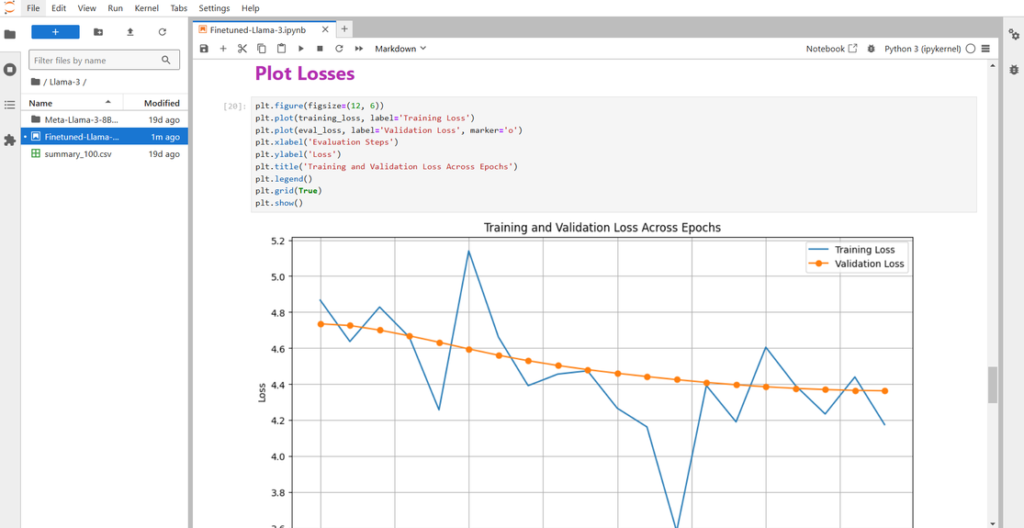
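The post gives the sample count and the GPU but not the hyperparameters, so the sketch below uses hypothetical values to show how they translate into optimizer steps. It also assumes the quantized model is trained through LoRA adapters on the attention projections. That is the usual pairing with k-bit training, but the post does not state it. The layer shapes are those of Llama 3 8B: 32 decoder layers, hidden size 4096, and 8 key/value heads of dimension 128, so the k/v projections output 1024 values.

```python
import math

# Hypothetical hyperparameters; the notebook's actual values are not shown in the post.
TRAIN_SAMPLES = 90           # 100 samples minus a 10% validation split
PER_DEVICE_BATCH_SIZE = 2
GRAD_ACCUM_STEPS = 3         # effective batch size = 2 * 3 = 6
EPOCHS = 3
LORA_RANK = 16

# Llama 3 8B attention projections per decoder layer: name -> (d_in, d_out).
# 32 query heads and 8 key/value heads, each of dimension 128.
LLAMA3_8B_ATTN = {
    "q_proj": (4096, 4096),
    "k_proj": (4096, 1024),
    "v_proj": (4096, 1024),
    "o_proj": (4096, 4096),
}
N_LAYERS = 32


def optimizer_steps(n_samples: int, batch_size: int, grad_accum: int, epochs: int) -> int:
    """Optimizer updates for a single-GPU run.

    Partial batches are rounded up here; some trainers drop a leftover partial
    accumulation instead, so values that divide evenly avoid the ambiguity.
    """
    batches_per_epoch = math.ceil(n_samples / batch_size)
    return math.ceil(batches_per_epoch / grad_accum) * epochs


def lora_params(d_in: int, d_out: int, rank: int) -> int:
    """A LoRA adapter adds A (rank x d_in) and B (d_out x rank) next to a frozen weight."""
    return rank * (d_in + d_out)


def lora_trainable_params(shapes: dict, n_layers: int, rank: int) -> int:
    """Total adapter parameters across all decoder layers."""
    per_layer = sum(lora_params(d_in, d_out, rank) for d_in, d_out in shapes.values())
    return n_layers * per_layer


print("optimizer steps:", optimizer_steps(TRAIN_SAMPLES, PER_DEVICE_BATCH_SIZE, GRAD_ACCUM_STEPS, EPOCHS))
print("LoRA trainable parameters:", lora_trainable_params(LLAMA3_8B_ATTN, N_LAYERS, LORA_RANK))
```

With these values, the run performs 45 optimizer steps. Rank-16 adapters on the four attention projections add 13,631,488 trainable parameters, about 0.17% of a nominal 8 billion. Because only these parameters need gradients and optimizer state, a small run like this is plausible on a single T4.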

7. Model Training Results.

The notebook also plots training and evaluation loss over the course of training, as shown in Figure 9. The graph lets you monitor the performance and convergence of the Llama-3 8B Instruct model in real time. It is a valuable tool for assessing how effective fine-tuning has been and for deciding what to adjust to get better results.

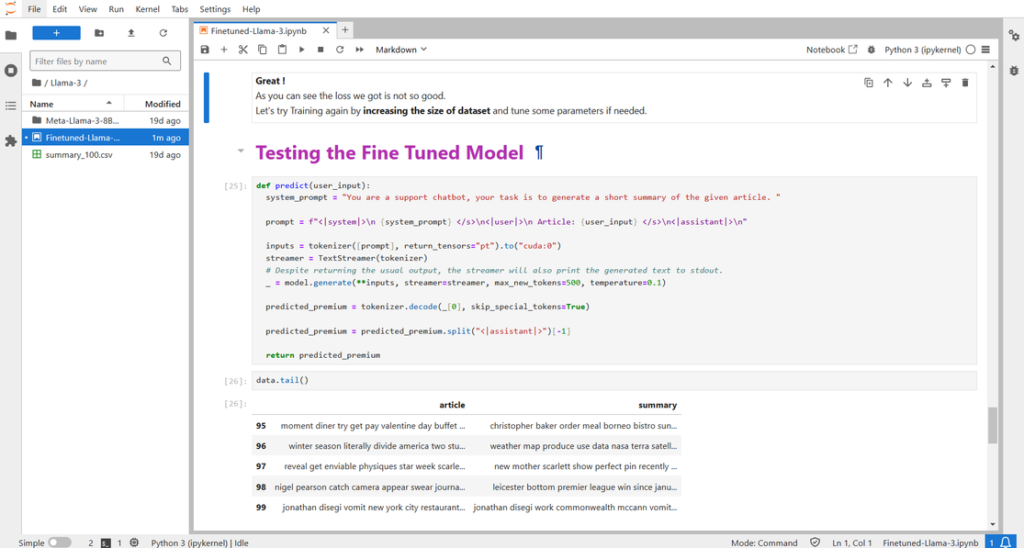
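If the notebook trains with the Hugging Face `Trainer` (an assumption, since the post only shows the resulting graph), the logged losses are available in `trainer.state.log_history`. This is a list of dicts in which training entries carry a `loss` key and evaluation entries an `eval_loss` key, each with the `step` at which it was logged. The sketch below separates the two series and flags a simple overfitting signal: evaluation loss rising for several consecutive evaluations. The helper names are hypothetical, and the history used to exercise them is made up, not output from the notebook.

```python
def split_loss_history(log_history):
    """Split Trainer-style log entries into (train, eval) lists of (step, loss).

    The end-of-training summary entry uses the key "train_loss", so it is skipped.
    """
    train, evals = [], []
    for entry in log_history:
        if "loss" in entry:
            train.append((entry["step"], entry["loss"]))
        if "eval_loss" in entry:
            evals.append((entry["step"], entry["eval_loss"]))
    return train, evals


def eval_loss_rising(evals, patience=2):
    """True if eval loss went up at each of the last `patience` evaluations."""
    if len(evals) <= patience:
        return False
    recent = [loss for _, loss in evals[-(patience + 1):]]
    return all(later > earlier for earlier, later in zip(recent, recent[1:]))
```

Passing each series to matplotlib's `plt.plot(*zip(*series))` gives a train/eval loss chart similar to the notebook's. If `eval_loss_rising` returns `True` while training loss keeps falling, consider fewer epochs or more data.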

8. Test the Fine-Tuned Model

You can test your fine-tuned model and re-train it if you find that it does not meet your expectations (Figure 10).
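A minimal generation helper for spot-checking summaries. It assumes a `transformers` causal LM and its tokenizer are already loaded in the notebook and that the model was fine-tuned on the Llama 3 chat format. The instruction text and function names are hypothetical. The helper builds the prompt with the tokenizer's own chat template, generates greedily, and decodes only the newly generated tokens. Because the model and tokenizer are passed in, the module itself imports nothing from `transformers`.

```python
# Hypothetical instruction; it should match whatever prompt was used during training.
INSTRUCTION = "Summarize the following article."


def build_messages(article: str) -> list:
    """Chat messages in the role/content format expected by apply_chat_template."""
    return [{"role": "user", "content": f"{INSTRUCTION}\n\n{article.strip()}"}]


def summarize(model, tokenizer, article: str, max_new_tokens: int = 128) -> str:
    """Generate a summary for one article with a fine-tuned causal LM."""
    prompt = tokenizer.apply_chat_template(
        build_messages(article), tokenize=False, add_generation_prompt=True
    )
    # The rendered template already starts with the BOS token, so don't add it again
    inputs = tokenizer(prompt, return_tensors="pt", add_special_tokens=False).to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens, do_sample=False)
    # Keep only the tokens generated after the prompt
    new_tokens = output_ids[0][inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True).strip()
```

Running `summarize(model, tokenizer, article)` on a few validation articles and comparing the output with their reference summaries is a quick way to decide whether another round of training is needed.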

9. Save your Fine-Tuned Model
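The post gives no further detail for this step, so here is a minimal sketch that assumes Hugging Face `transformers`/`peft` objects. Calling `save_pretrained` on a PEFT-wrapped model writes only the small adapter weights. Saving the tokenizer alongside keeps its chat template with the weights. The output directory and the `training_metadata.json` file are hypothetical additions for record-keeping.

```python
import json
from pathlib import Path


def save_finetuned(model, tokenizer, output_dir, metadata=None) -> Path:
    """Save model (or adapter) weights, tokenizer files, and optional run metadata."""
    out = Path(output_dir)
    out.mkdir(parents=True, exist_ok=True)
    model.save_pretrained(str(out))      # for a PEFT model this writes only the adapter
    tokenizer.save_pretrained(str(out))  # keeps the chat template next to the weights
    if metadata is not None:
        # Hypothetical extra file recording how the run was configured
        (out / "training_metadata.json").write_text(json.dumps(metadata, indent=2), encoding="utf-8")
    return out
```

An adapter saved this way can later be reloaded together with its base model, for example with `peft`'s `AutoPeftModelForCausalLM.from_pretrained(output_dir)`.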

Closing Remarks

In this blog, we walked step by step through fine-tuning Meta Llama-3-8B Instruct for a summarization task on the Qubrid AI Platform. If you’re interested in fine-tuning other AI models, such as LLMs, text-to-image, or speech recognition models, be sure to check out the AI Hub.