Fine-tuning a large language model (LLM) or a Stable Diffusion model is the process of adjusting its parameters to perform better on a specific task or within a particular domain. While pre-trained models like GPT are great at general language understanding, they may not be as effective when applied to specialized fields. Fine-tuning helps make these models more accurate for specific tasks by training them on domain-specific data.

Why do we need fine-tuning?

- Customization

Imagine you have a robot that can do a lot of things, like cleaning and cooking. But if you want it to make your favorite sandwich, it might not know how. You can teach the robot exactly how to make the sandwich you love! This is like fine-tuning: you train the robot to do things the way you like.

- Data Compliance

Let’s say you have a secret recipe for cookies that only your family knows. You want to teach it to the robot without handing it to anyone outside the family. Fine-tuning works the same way: you can train a model on your own data within your own environment, so sensitive information stays under your control instead of being shared with an outside service.

- Limited Data

Sometimes, you don’t have a lot of examples to teach the robot. But because the robot already knows how to do many things, even a few examples are enough for it to learn something new! Fine-tuning helps the robot get smarter, even when you only give it a little bit of information to practice with.

In short, fine-tuning is like training a helper to do exactly what you need, keeping secrets safe, and learning even from a few examples. It makes the model more useful and precise for your specific tasks.

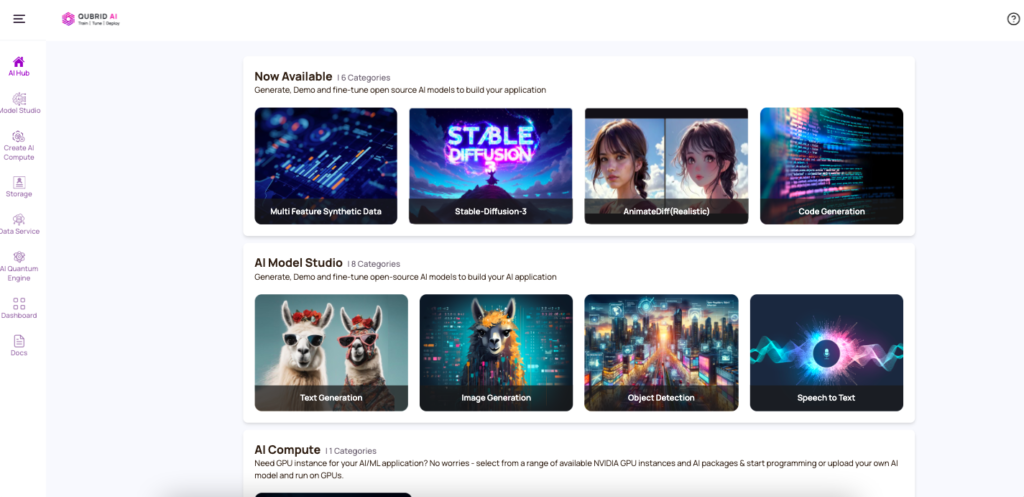

Fine-Tuning Solutions by Qubrid AI

Below are some of the advanced fine-tuning methods used by Qubrid AI to enhance and personalize models efficiently.

1. Fine-Tuning Methods for LLMs

LoRA

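Low-Rank Adaptation (LoRA) is a fine-tuning method introduced by a team of Microsoft researchers in 2021. It builds on the observation that the weight changes needed to adapt a pre-trained model to a new task have a low “intrinsic rank,” so they can be captured with far fewer parameters than the full weight matrices contain.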

LoRA is designed to fine-tune large-scale models efficiently by freezing the pre-trained weights and training only a small number of additional parameters. This contrasts with traditional (full) fine-tuning, where every weight in the model may be updated. LoRA achieves this by:

- Learning a separate change to the weights instead of updating the original weights directly.

- Representing that change as the product of two much smaller (low-rank) matrices, which hold the “trainable parameters.”
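The two points above can be sketched in plain Python. This is a minimal, illustrative sketch rather than any library’s implementation: `LoRALinear` and its helper functions are hypothetical names, and matrices are plain lists of rows to keep it dependency-free. The frozen weight W (d_out x d_in) is never modified; the change is stored as B (d_out x r) times A (r x d_in), scaled by alpha / r. As in the original LoRA paper, A starts random and B starts at zero, so the adapted layer initially behaves exactly like the pre-trained one.

```python
import random


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def matadd(a, b):
    """Element-wise sum of two equally shaped matrices."""
    return [[x + y for x, y in zip(row_a, row_b)] for row_a, row_b in zip(a, b)]


def scale(a, s):
    """Multiply every entry of a matrix by the scalar s."""
    return [[s * x for x in row] for row in a]


class LoRALinear:
    """Hypothetical sketch: a frozen weight W plus a trainable low-rank update B @ A."""

    def __init__(self, weight, rank, alpha, seed=0):
        rng = random.Random(seed)
        d_out, d_in = len(weight), len(weight[0])
        self.weight = weight  # pre-trained weights, kept frozen
        # A (rank x d_in) starts small and random; B (d_out x rank) starts at zero,
        # so B @ A is zero and the layer initially matches the original.
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(d_out)]
        self.scaling = alpha / rank

    def delta(self):
        """The learned weight change (alpha / rank) * B @ A, shape d_out x d_in."""
        return scale(matmul(self.B, self.A), self.scaling)

    def forward(self, x):
        """Compute W x + (alpha / rank) * B (A x) for a column vector x (d_in x 1)."""
        base = matmul(self.weight, x)
        update = matmul(self.B, matmul(self.A, x))
        return matadd(base, scale(update, self.scaling))

    def merged_weight(self):
        """Fold the update into W so inference needs only one matrix multiply."""
        return matadd(self.weight, self.delta())

    def num_trainable(self):
        """Only A and B are trained: rank * (d_in + d_out) values."""
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])


# Usage: while B is still zero, the output equals the pre-trained layer's W x.
W = [[1.0, 0.0, 2.0], [0.0, 1.0, -1.0]]
layer = LoRALinear(W, rank=1, alpha=1.0)
x = [[1.0], [2.0], [3.0]]
print(layer.forward(x))  # [[7.0], [-1.0]]
print(layer.num_trainable(), "trainable values vs", len(W) * len(W[0]), "in W")
```

Because `merged_weight()` folds B A back into W, a trained adapter can be merged for inference and adds no extra latency; keeping it separate instead lets one base model switch between several small adapters.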

2. Fine-Tuning Methods for Stable Diffusion

LoRA

LoRA (Low-Rank Adaptation) is an efficient fine-tuning method that trains only a small number of added parameters instead of the entire network. This makes it much faster and less resource-intensive compared to traditional fine-tuning. LoRA is ideal for tasks that require rapid adaptation while maintaining high performance without needing extensive computational resources.

DreamBooth

DreamBooth is a powerful technique for fine-tuning text-to-image models, enabling them to generate highly personalized images of specific subjects (like a person or object). While DreamBooth achieves impressive results, it requires substantial computational power since it updates the entire model to embed new concepts. This method is best suited to cases where detailed, subject-specific customization is needed.

DreamBooth + LoRA

When you combine DreamBooth with LoRA, you’re essentially using DreamBooth to teach the model about a specific subject, but instead of updating the whole model (which can be slow and expensive), you train small LoRA adapters on selected layers while the original weights stay frozen. This gives you the benefit of DreamBooth’s subject-specific fine-tuning while keeping the process more efficient, as LoRA reduces the computational overhead.
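The savings are easy to quantify per layer. For a d_out x d_in weight matrix, full fine-tuning trains every entry, while a rank-r LoRA adapter trains only r * (d_out + d_in) values. The sketch below assumes hypothetical layer shapes and ranks chosen for illustration, not taken from any particular Stable Diffusion checkpoint; for a 1280 x 1280 layer at rank 4, that is 10,240 trainable values instead of 1,638,400.

```python
def full_finetune_params(d_out, d_in):
    """Trainable values when every entry of a d_out x d_in weight matrix is updated."""
    return d_out * d_in


def lora_params(d_out, d_in, rank):
    """Trainable values for a LoRA adapter: B is d_out x rank, A is rank x d_in."""
    return rank * (d_out + d_in)


# Hypothetical layer shapes and ranks, for illustration only.
for d_out, d_in, rank in [(1280, 1280, 4), (4096, 4096, 8)]:
    full = full_finetune_params(d_out, d_in)
    lora = lora_params(d_out, d_in, rank)
    print(f"{d_out}x{d_in}, rank {rank}: full={full:,} lora={lora:,} ({lora / full:.2%})")
```

The same count applies to every adapted layer, which is why DreamBooth + LoRA checkpoints are typically a small fraction of the size of a fully fine-tuned model.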

Fine-tuning best practices

Choose and use the right pre-trained model:

Using pre-trained models for fine-tuning large language models is crucial because it leverages knowledge acquired from vast amounts of data, ensuring that the model doesn’t start learning from scratch. This approach is both computationally efficient and time-saving. Additionally, pre-training captures general language understanding, allowing fine-tuning to focus on domain-specific nuances, often resulting in better model performance in specialized tasks.

Select the right dataset:

The dataset used for fine-tuning is just as important as the model itself. It’s essential to choose or curate a dataset that is relevant to the task at hand and represents the specific domain or topic well. High-quality, diverse, and balanced datasets lead to more effective fine-tuning, reducing biases and improving the model’s ability to generalize to unseen data.
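Some of these qualities can be checked mechanically before training starts. This is a minimal sketch, assuming a labeled dataset of (text, label) pairs; the function names and the toy data are hypothetical. It drops exact duplicates, reports each label’s share so an unbalanced dataset stands out, and sets aside validation and test sets with a fixed random seed.

```python
import random
from collections import Counter


def deduplicate(examples):
    """Drop exact duplicate (text, label) pairs, keeping the first occurrence."""
    seen = set()
    unique = []
    for example in examples:
        if example not in seen:
            seen.add(example)
            unique.append(example)
    return unique


def label_shares(examples):
    """Fraction of examples per label, to spot an unbalanced dataset."""
    counts = Counter(label for _, label in examples)
    total = sum(counts.values())
    return {label: count / total for label, count in counts.items()}


def split(examples, val_frac=0.1, test_frac=0.1, seed=42):
    """Shuffle once with a fixed seed, then carve out validation and test sets."""
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_frac)
    n_val = int(len(shuffled) * val_frac)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test


# Toy support-ticket data, for illustration only.
data = [
    ("refund not received", "billing"),
    ("app crashes on login", "bug"),
    ("refund not received", "billing"),
    ("charged twice", "billing"),
]
clean = deduplicate(data)
print(len(clean))  # 3
print(label_shares(clean))
```

Exact-match deduplication is only a first pass; near-duplicates, label quality, and relevance to the target task still need human review.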

Set hyperparameters:

Hyperparameters are tunable settings, fixed before training starts, that play a key role in the training process. Learning rate, batch size, number of epochs, and weight decay are among the most important ones to adjust when searching for the optimal configuration for your task.
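One simple way to search for that configuration is a grid search: try every combination and keep the one that scores best on the validation set, leaving the test set untouched for the final evaluation. This is a minimal sketch: `FineTuneConfig` and `grid_search` are hypothetical names, the search space values are illustrative rather than recommendations, and `fake_train_and_score` stands in for a real training run so the example executes.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class FineTuneConfig:
    learning_rate: float
    batch_size: int
    num_epochs: int
    weight_decay: float


def grid_search(train_and_score, grid):
    """Try every combination in `grid` and return the best config and its score.

    `train_and_score` is a hypothetical callable: it fine-tunes with a config and
    returns a score on the validation set (higher is better).
    """
    names = list(grid)
    best_config, best_score = None, float("-inf")
    for values in product(*(grid[name] for name in names)):
        config = FineTuneConfig(**dict(zip(names, values)))
        score = train_and_score(config)
        if score > best_score:  # strict ">" keeps the first of any tied configs
            best_config, best_score = config, score
    return best_config, best_score


# Illustrative search space; sensible values depend on the model, method, and data.
grid = {
    "learning_rate": [1e-5, 5e-5, 1e-4],
    "batch_size": [8, 16],
    "num_epochs": [2, 3],
    "weight_decay": [0.0, 0.01],
}


def fake_train_and_score(config):
    # Stand-in for a real training run plus validation, used only for this demo.
    return (-abs(config.learning_rate - 5e-5)
            - 0.001 * config.num_epochs
            + 0.0001 * config.batch_size)


best, score = grid_search(fake_train_and_score, grid)
print(best)
```

A full grid grows multiplicatively with each hyperparameter, so with expensive training runs random search over the same ranges is a common alternative.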

Evaluate model performance:

Once fine-tuning is complete, assess the model’s performance on a held-out test set that was not used for training or hyperparameter tuning. This provides an unbiased estimate of how well the model will perform on unseen data. If there is still room for improvement, consider refining the model iteratively.
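Comparing the fine-tuned model with the original on the same held-out test set shows whether fine-tuning actually helped. This is a minimal sketch using exact-match accuracy, which suits short-answer tasks; open-ended generation usually needs other metrics. The `predict` callables and the toy data are hypothetical stand-ins for real model calls.

```python
def exact_match_accuracy(predict, test_set):
    """Fraction of test prompts whose prediction matches the reference exactly.

    `predict` is a hypothetical callable wrapping a model: it takes a prompt
    string and returns the model's answer as a string.
    """
    if not test_set:
        raise ValueError("test_set must not be empty")
    correct = sum(
        1
        for prompt, reference in test_set
        if predict(prompt).strip().lower() == reference.strip().lower()
    )
    return correct / len(test_set)


# Toy held-out examples and stand-in "models", so the sketch runs on its own.
test_set = [("2+2?", "4"), ("Capital of France?", "Paris"), ("3*3?", "9")]
baseline = {"2+2?": "4", "Capital of France?": "Lyon", "3*3?": "6"}
finetuned = {"2+2?": "4", "Capital of France?": "paris", "3*3?": "9"}

print(exact_match_accuracy(baseline.get, test_set))   # 0.3333333333333333
print(exact_match_accuracy(finetuned.get, test_set))  # 1.0
```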

Key takeaways

Fine-tuning is essential for both large language models (LLMs) and Stable Diffusion, turning them into specialized tools for enterprises. While LLMs understand language broadly and Stable Diffusion generates general images, fine-tuning hones these models to handle specific tasks and produce precise outputs. By training them on niche topics or custom data, we unlock their full potential. As fine-tuning evolves, it will lead to smarter, more efficient, and context-aware AI systems, benefiting industries from automation to creativity.