Retrieval-Augmented Generation (RAG) is a technique for improving the accuracy and usefulness of large language models (LLMs). It pairs a retrieval system with a generative model so that outputs are grounded in relevant external information rather than in the model's training data alone. Qubrid AI has simplified the implementation and use of RAG.

RAG incorporates two main components:

- Retriever: A retrieval system fetches relevant information from external knowledge sources, such as databases, documents, or the web.

- Generator: A generative model processes the retrieved information and uses it to generate a response or output.

This combination gives the model access to external knowledge at query time, so its answers are not limited to what was in its static training dataset.
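The two components can be illustrated with a minimal Python sketch. It is not how Qubrid AI implements RAG: the retriever here is a toy bag-of-words cosine similarity (production systems typically use embedding-based search), and `generate` is a hypothetical placeholder standing in for a call to a deployed LLM. All function names are assumptions made for illustration.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two token-count vectors."""
    dot = sum(count * b[token] for token, count in a.items() if token in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, documents, top_k=2):
    """Retriever: return the top_k documents most similar to the query."""
    query_vec = Counter(tokenize(query))
    scored = [(cosine(query_vec, Counter(tokenize(doc))), doc) for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:top_k] if score > 0]


def build_prompt(query, passages):
    """Combine the retrieved passages and the user question into one prompt."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"
    )


def generate(prompt):
    """Generator: hypothetical placeholder for a call to a deployed LLM."""
    return f"[LLM response to a prompt of {len(prompt)} characters]"


def rag_answer(query, documents, top_k=2):
    """Retrieve relevant passages, then generate a response from them."""
    passages = retrieve(query, documents, top_k)
    return generate(build_prompt(query, passages))
```

The key design point is that the generator only ever sees the question plus the retrieved passages, so updating the document collection changes the answers without retraining the model.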

Use Cases

- Question Answering: RAG can be used to provide precise and up-to-date answers to user queries by retrieving relevant documents and generating responses based on them.

- Personalized Recommendations: It can retrieve user-specific data or preferences and generate recommendations tailored to the individual.

- Document Summarization: When summarizing documents, the retriever fetches relevant sections, and the generator creates a concise summary.

- Enterprise Knowledge Management: Organizations can use RAG to access and summarize internal knowledge bases for tasks like customer support or employee onboarding.

- Fact-Checking: RAG models can retrieve evidence from trusted sources and generate outputs that are grounded in factual data.

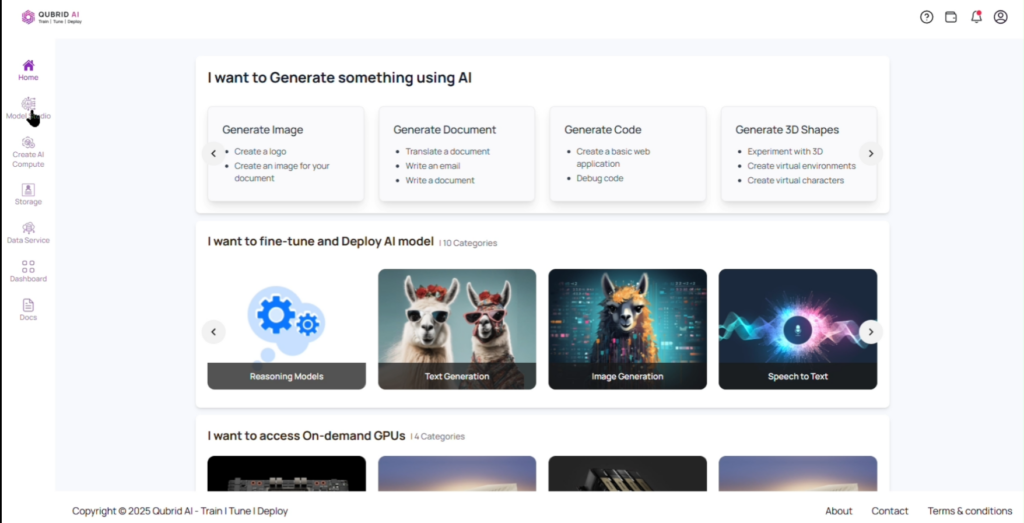

RAG on Qubrid AI Cloud and On-Prem Platform

This guide walks you through how to interact with Retrieval-Augmented Generation (RAG) models on the Qubrid AI platform. The RAG UI feature allows users to upload PDFs and engage in intelligent, context-aware conversations with their content.

Steps to Interact with RAG UI Models

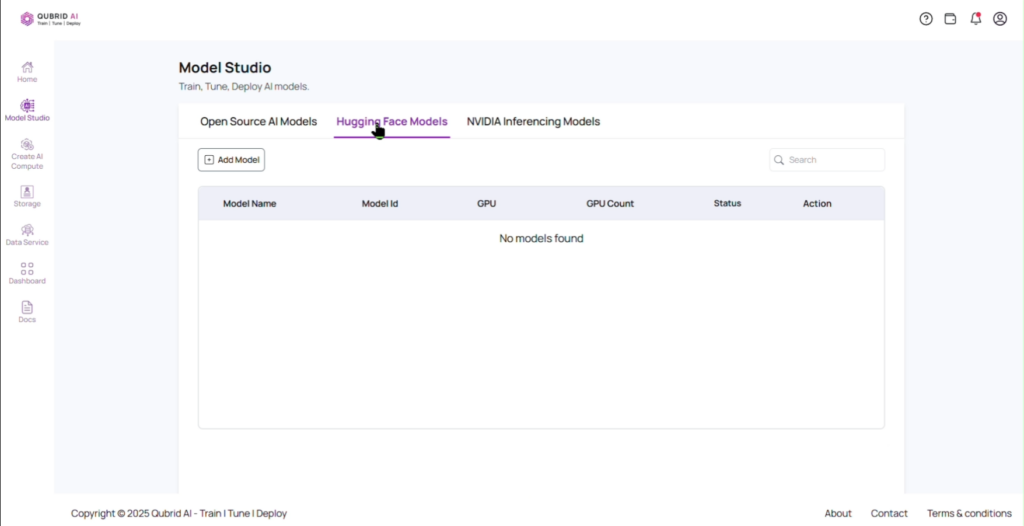

1. Deploy a Hugging Face Model

1.a. Navigate to the Model Studio on Qubrid AI.

1.b. Click on Hugging Face Models and deploy your model.

- Note: For more information on how to deploy a Hugging Face model on Qubrid AI, see the guide "Deploying Hugging Face Models on Qubrid AI Platform".

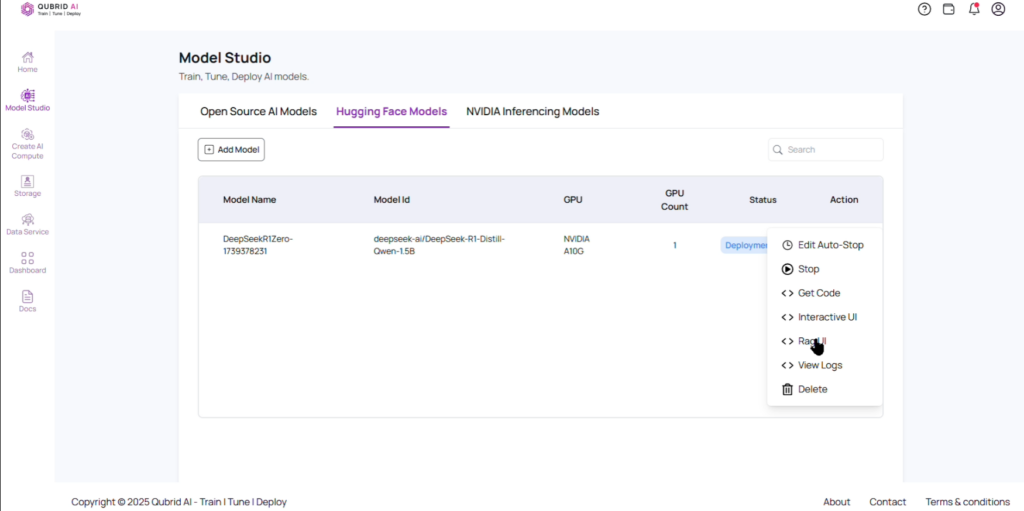

2. Navigate to RAG UI

2.a. Once the model is deployed, go to Actions and select RAG UI.

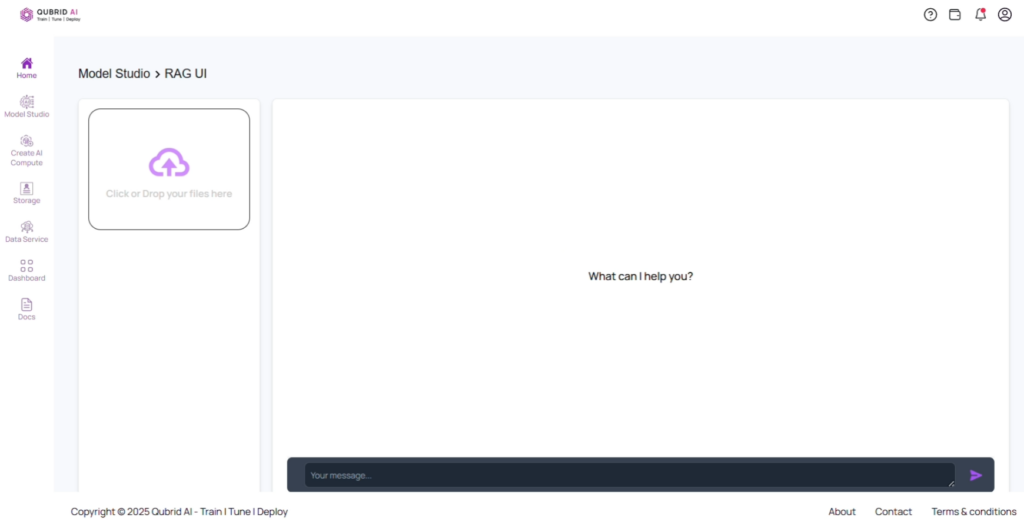

3. Interact with RAG UI

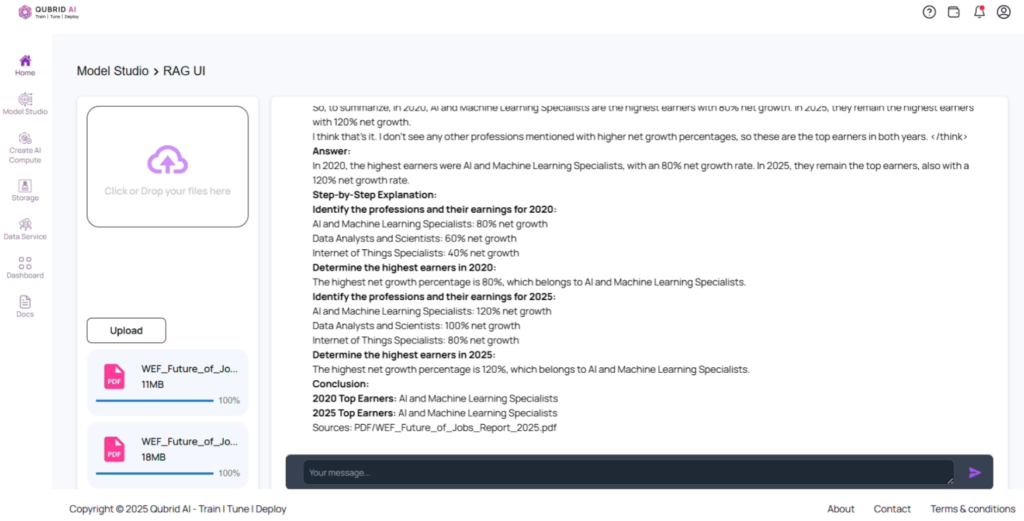

3.a. Click on the Upload PDF button.

3.b. Select the document(s) you want to interact with and click Upload. Wait for the system to process and index the documents.

3.c. Use the chat interface to ask questions about the content of the uploaded documents. The RAG model retrieves the relevant passages and generates responses grounded in the uploaded content.
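The "process and index" step in 3.b happens inside the platform, and its details are not described here. As a rough illustration of what such a step commonly involves, the sketch below splits already-extracted page text into overlapping word windows and tags each chunk with its source and page so it can be cited later. The `Chunk` class, the `chunk_pages` function, and the chunk sizes are all assumptions, not Qubrid AI's actual settings.

```python
from dataclasses import dataclass


@dataclass
class Chunk:
    source: str  # file name of the uploaded document
    page: int    # 1-based page number the chunk came from
    text: str    # the chunk's text


def chunk_pages(source, pages, chunk_words=50, overlap=10):
    """Split each page's text into overlapping windows of words.

    `pages` is a list of strings, one per page, assumed to have been
    extracted from the PDF beforehand. The overlap keeps sentences that
    straddle a chunk boundary retrievable from either side.
    """
    if overlap >= chunk_words:
        raise ValueError("overlap must be smaller than chunk_words")
    step = chunk_words - overlap
    chunks = []
    for page_number, page_text in enumerate(pages, start=1):
        words = page_text.split()
        for start in range(0, len(words), step):
            window = words[start:start + chunk_words]
            chunks.append(Chunk(source, page_number, " ".join(window)))
            # Stop once this window has reached the end of the page.
            if start + chunk_words >= len(words):
                break
    return chunks
```

Each chunk would then typically be converted to a vector and stored in a search index. Keeping the source and page alongside the text is what makes cited answers possible later.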


Example Use Case

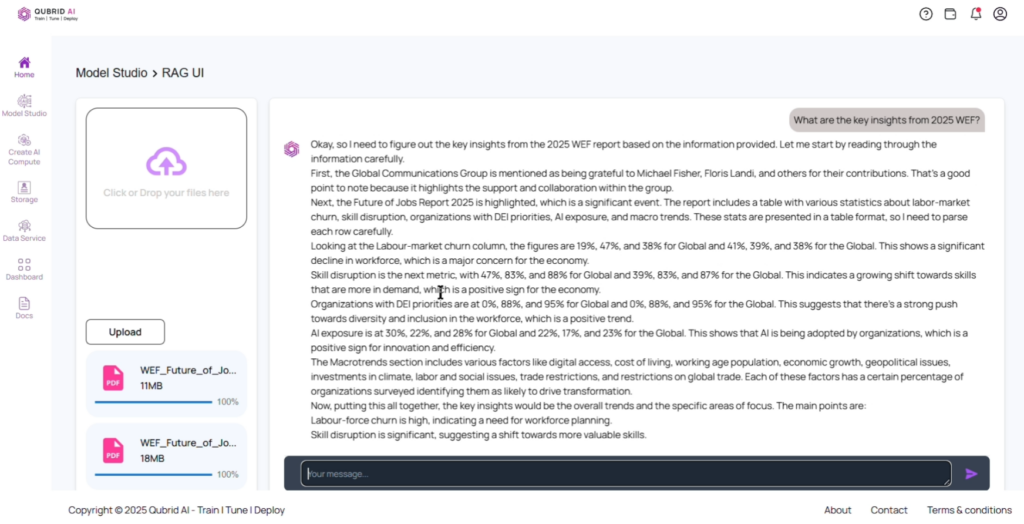

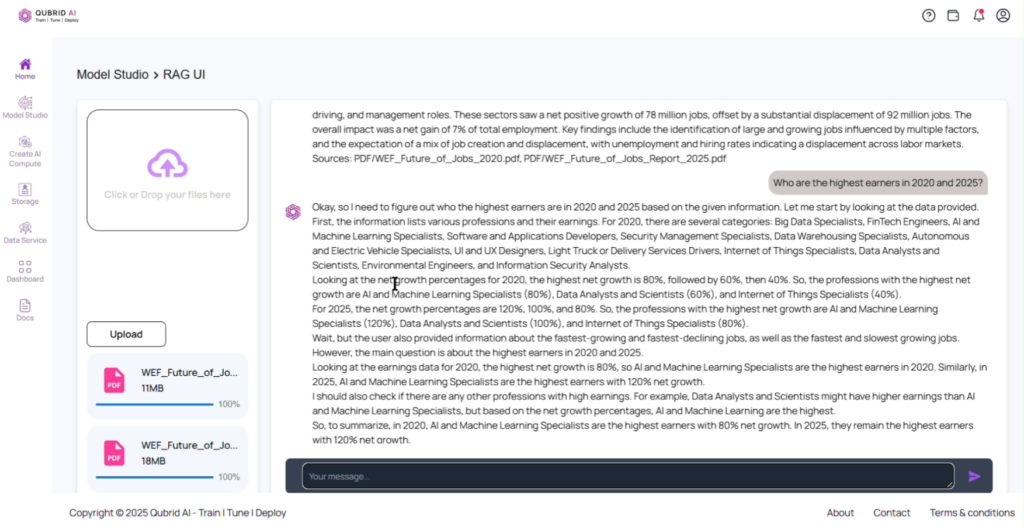

For instance, after uploading the two World Economic Forum reports from 2020 and 2025:

- WEF Future of Jobs Report 2025 (290 Pages)

- WEF Future of Jobs Report 2020 (163 Pages)

The RAG model was able to compare the two documents. Given the prompt “Who are the highest earners in 2020 and 2025?”, it drew on both reports and returned a clear response with citations.

This highlights RAG’s ability to improve AI-driven interactions by providing precise, context-aware, and well-supported responses.
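One common way to obtain cited answers across several documents is to number each retrieved passage, label it with its source, and ask the model to cite by number. The sketch below shows that prompt-construction pattern only. It is an assumption about how such a prompt could be built, not the prompt the Qubrid AI RAG UI uses, and the passage text and page numbers in the demo are placeholders rather than content from the reports.

```python
def format_context_with_citations(passages):
    """Render passages as numbered, source-labelled lines.

    `passages` is a list of (source, page, text) tuples.
    """
    lines = []
    for number, (source, page, text) in enumerate(passages, start=1):
        lines.append(f"[{number}] {source}, p. {page}: {text}")
    return "\n".join(lines)


def build_comparison_prompt(question, passages):
    """Build a prompt that asks the model to cite passages by number."""
    context = format_context_with_citations(passages)
    return (
        "Answer the question using only the numbered passages below. "
        "Cite the passages you rely on by number, e.g. [1].\n\n"
        f"{context}\n\nQuestion: {question}"
    )


if __name__ == "__main__":
    # Placeholder passages: the text and page numbers are illustrative only.
    retrieved = [
        ("WEF Future of Jobs Report 2020", 1, "(retrieved passage text)"),
        ("WEF Future of Jobs Report 2025", 1, "(retrieved passage text)"),
    ]
    print(build_comparison_prompt(
        "Who are the highest earners in 2020 and 2025?", retrieved
    ))
```

Because every passage carries its source label into the prompt, the citation numbers in the model's answer can be mapped back to a specific report and page.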
